
Kafka Growth Notes 2: Analyzing the Producer's Core Components

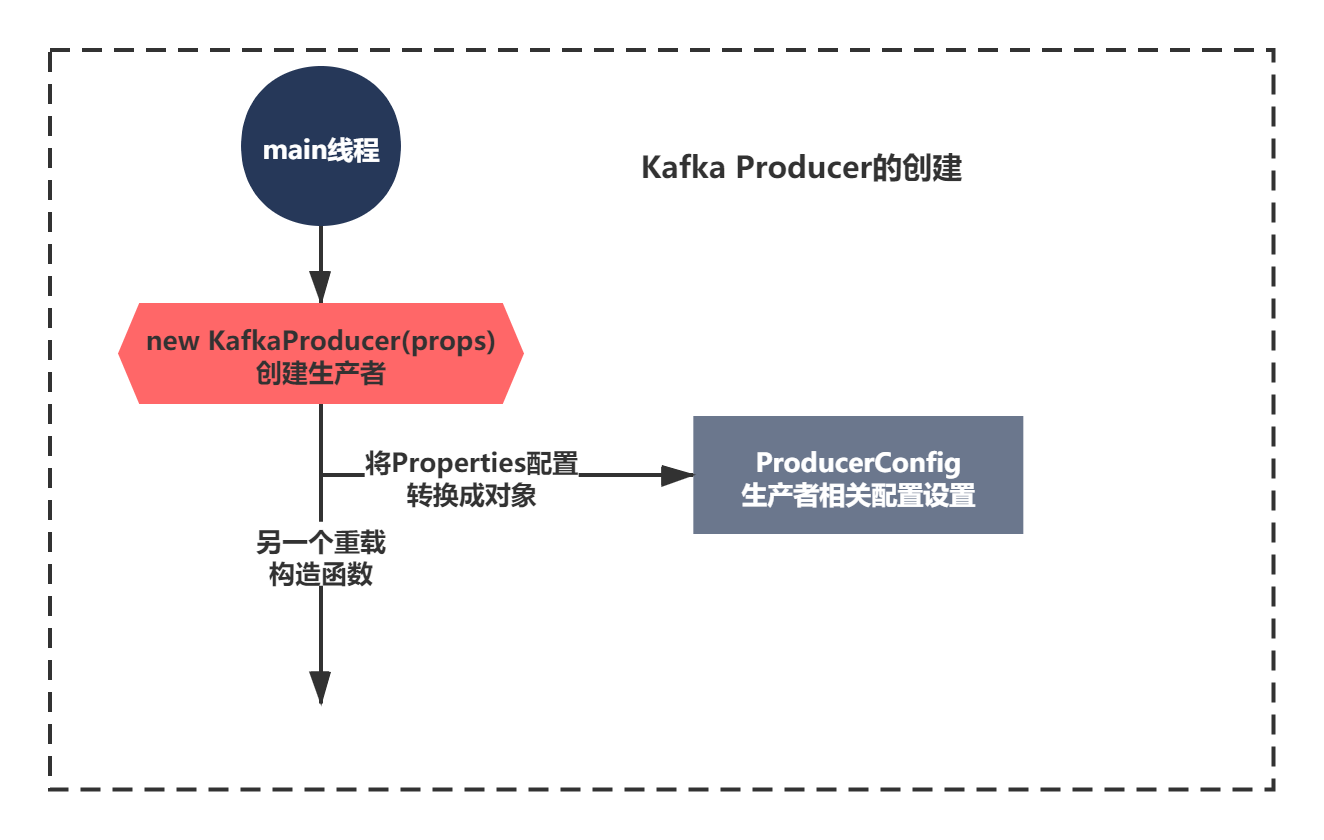

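In the previous installment we started from a HelloWorld example and analyzed how a Kafka Producer is created, focusing on the source code that parses the producer configuration.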

public KafkaProducer(Properties properties) {

this(new ProducerConfig(properties), null, null);

}

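Besides parsing the configuration, creating a Kafka Producer involves one more key step: it calls an overloaded constructor. In this installment we look at what that constructor mainly does.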

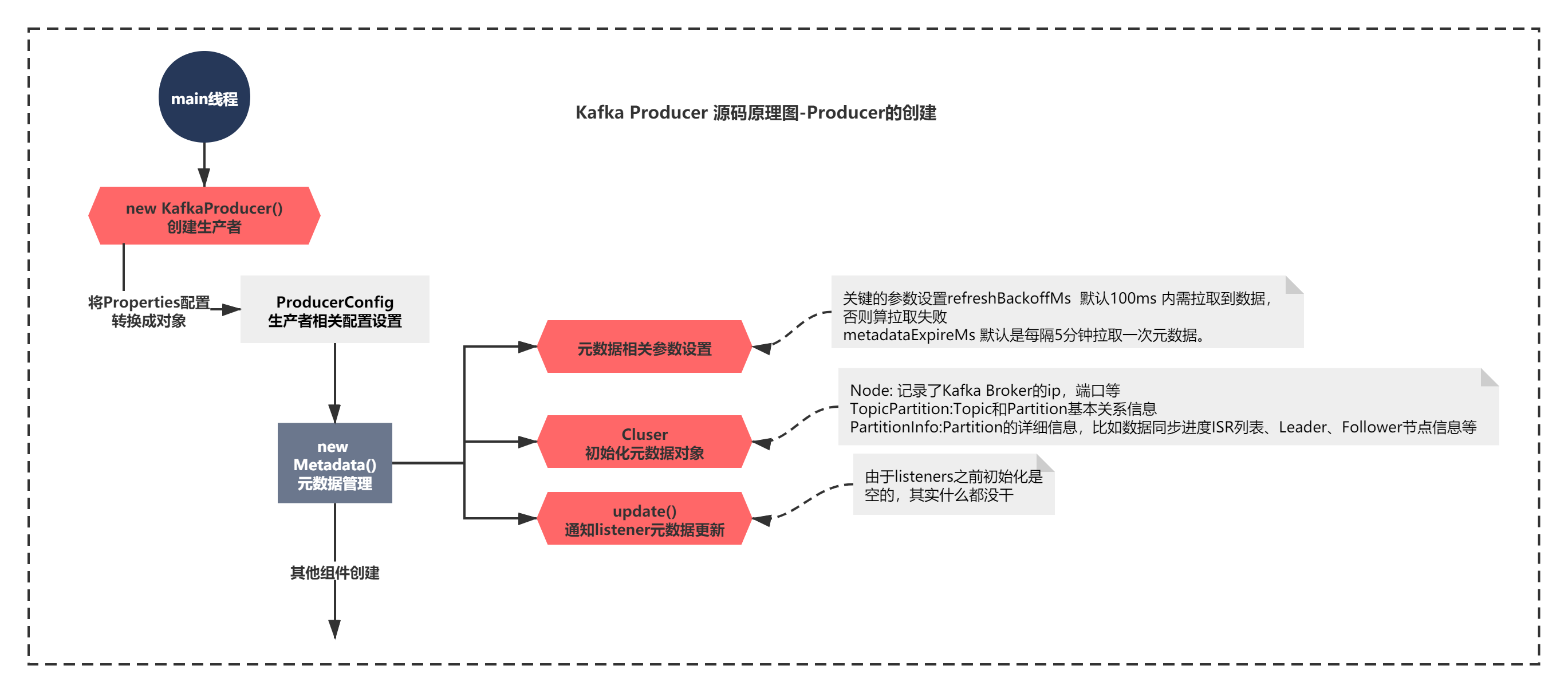

Which components does KafkaProducer initialize?

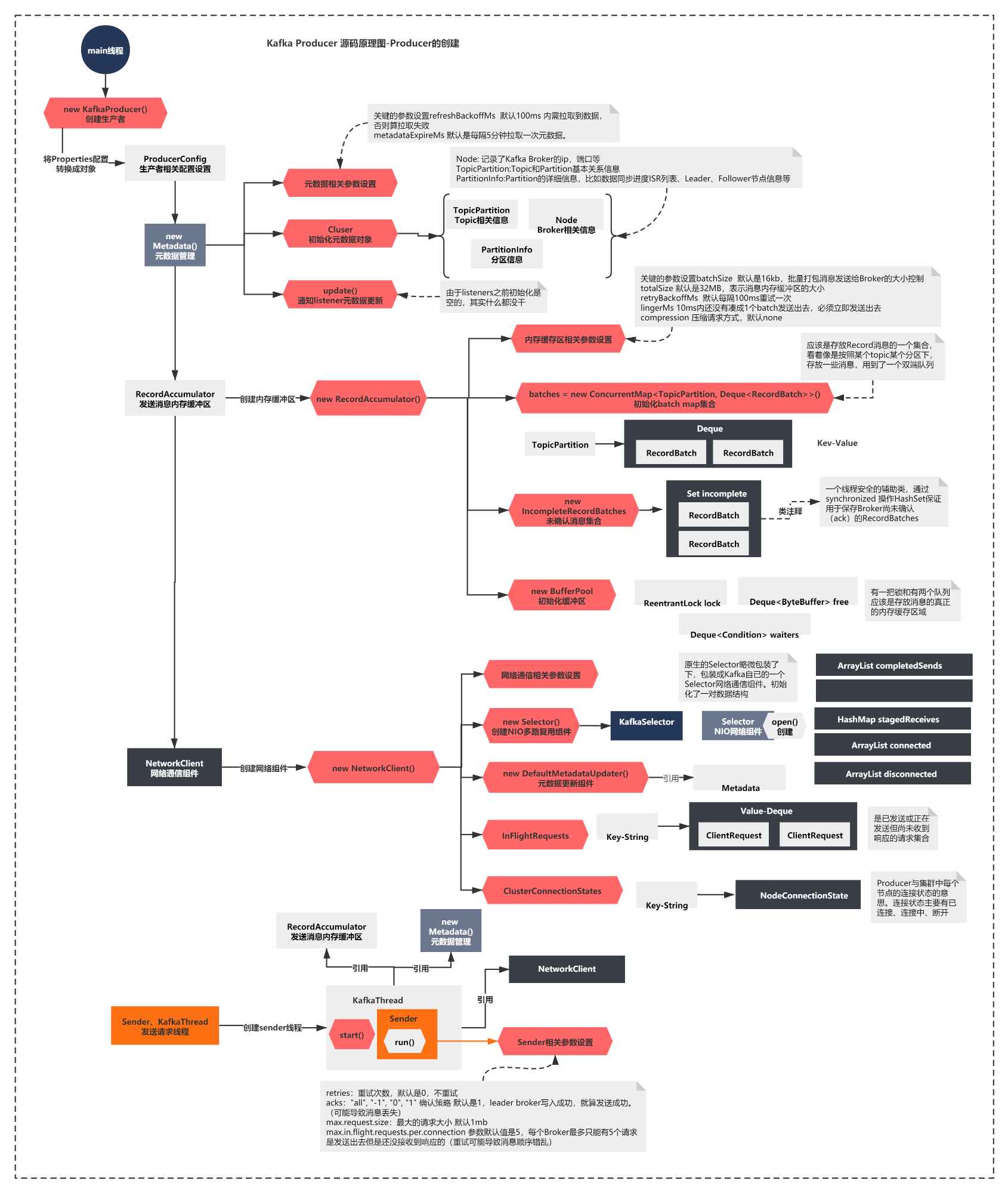

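Since we are analyzing a constructor that creates the key components, the first thing to do is trace the outline of its code, identify the core components, and sketch a component diagram.

Let's look at the constructor's code: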

private KafkaProducer(ProducerConfig config, Serializer<K> keySerializer, Serializer<V> valueSerializer) {

try {

log.trace("Starting the Kafka producer");

Map<String, Object> userProvidedConfigs = config.originals();

this.producerConfig = config;

this.time = new SystemTime();

clientId = config.getString(ProducerConfig.CLIENT_ID_CONFIG);

if (clientId.length() <= 0)

clientId = "producer-" + PRODUCER_CLIENT_ID_SEQUENCE.getAndIncrement();

Map<String, String> metricTags = new LinkedHashMap<String, String>();

metricTags.put("client-id", clientId);

MetricConfig metricConfig = new MetricConfig().samples(config.getInt(ProducerConfig.METRICS_NUM_SAMPLES_CONFIG))

.timeWindow(config.getLong(ProducerConfig.METRICS_SAMPLE_WINDOW_MS_CONFIG), TimeUnit.MILLISECONDS)

.tags(metricTags);

List<MetricsReporter> reporters = config.getConfiguredInstances(ProducerConfig.METRIC_REPORTER_CLASSES_CONFIG,

MetricsReporter.class);

reporters.add(new JmxReporter(JMX_PREFIX));

this.metrics = new Metrics(metricConfig, reporters, time);

this.partitioner = config.getConfiguredInstance(ProducerConfig.PARTITIONER_CLASS_CONFIG, Partitioner.class);

long retryBackoffMs = config.getLong(ProducerConfig.RETRY_BACKOFF_MS_CONFIG);

this.metadata = new Metadata(retryBackoffMs, config.getLong(ProducerConfig.METADATA_MAX_AGE_CONFIG));

this.maxRequestSize = config.getInt(ProducerConfig.MAX_REQUEST_SIZE_CONFIG);

this.totalMemorySize = config.getLong(ProducerConfig.BUFFER_MEMORY_CONFIG);

this.compressionType = CompressionType.forName(config.getString(ProducerConfig.COMPRESSION_TYPE_CONFIG));

/* check for user defined settings.

* If the BLOCK_ON_BUFFER_FULL is set to true,we do not honor METADATA_FETCH_TIMEOUT_CONFIG.

* This should be removed with release 0.9 when the deprecated configs are removed.

*/

if (userProvidedConfigs.containsKey(ProducerConfig.BLOCK_ON_BUFFER_FULL_CONFIG)) {

log.warn(ProducerConfig.BLOCK_ON_BUFFER_FULL_CONFIG + " config is deprecated and will be removed soon. " +

"Please use " + ProducerConfig.MAX_BLOCK_MS_CONFIG);

boolean blockOnBufferFull = config.getBoolean(ProducerConfig.BLOCK_ON_BUFFER_FULL_CONFIG);

if (blockOnBufferFull) {

this.maxBlockTimeMs = Long.MAX_VALUE;

} else if (userProvidedConfigs.containsKey(ProducerConfig.METADATA_FETCH_TIMEOUT_CONFIG)) {

log.warn(ProducerConfig.METADATA_FETCH_TIMEOUT_CONFIG + " config is deprecated and will be removed soon. " +

"Please use " + ProducerConfig.MAX_BLOCK_MS_CONFIG);

this.maxBlockTimeMs = config.getLong(ProducerConfig.METADATA_FETCH_TIMEOUT_CONFIG);

} else {

this.maxBlockTimeMs = config.getLong(ProducerConfig.MAX_BLOCK_MS_CONFIG);

}

} else if (userProvidedConfigs.containsKey(ProducerConfig.METADATA_FETCH_TIMEOUT_CONFIG)) {

log.warn(ProducerConfig.METADATA_FETCH_TIMEOUT_CONFIG + " config is deprecated and will be removed soon. " +

"Please use " + ProducerConfig.MAX_BLOCK_MS_CONFIG);

this.maxBlockTimeMs = config.getLong(ProducerConfig.METADATA_FETCH_TIMEOUT_CONFIG);

} else {

this.maxBlockTimeMs = config.getLong(ProducerConfig.MAX_BLOCK_MS_CONFIG);

}

/* check for user defined settings.

* If the TIME_OUT config is set use that for request timeout.

* This should be removed with release 0.9

*/

if (userProvidedConfigs.containsKey(ProducerConfig.TIMEOUT_CONFIG)) {

log.warn(ProducerConfig.TIMEOUT_CONFIG + " config is deprecated and will be removed soon. Please use " +

ProducerConfig.REQUEST_TIMEOUT_MS_CONFIG);

this.requestTimeoutMs = config.getInt(ProducerConfig.TIMEOUT_CONFIG);

} else {

this.requestTimeoutMs = config.getInt(ProducerConfig.REQUEST_TIMEOUT_MS_CONFIG);

}

this.accumulator = new RecordAccumulator(config.getInt(ProducerConfig.BATCH_SIZE_CONFIG),

this.totalMemorySize,

this.compressionType,

config.getLong(ProducerConfig.LINGER_MS_CONFIG),

retryBackoffMs,

metrics,

time);

List<InetSocketAddress> addresses = ClientUtils.parseAndValidateAddresses(config.getList(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG));

this.metadata.update(Cluster.bootstrap(addresses), time.milliseconds());

ChannelBuilder channelBuilder = ClientUtils.createChannelBuilder(config.values());

NetworkClient client = new NetworkClient(

new Selector(config.getLong(ProducerConfig.CONNECTIONS_MAX_IDLE_MS_CONFIG), this.metrics, time, "producer", channelBuilder),

this.metadata,

clientId,

config.getInt(ProducerConfig.MAX_IN_FLIGHT_REQUESTS_PER_CONNECTION),

config.getLong(ProducerConfig.RECONNECT_BACKOFF_MS_CONFIG),

config.getInt(ProducerConfig.SEND_BUFFER_CONFIG),

config.getInt(ProducerConfig.RECEIVE_BUFFER_CONFIG),

this.requestTimeoutMs, time);

this.sender = new Sender(client,

this.metadata,

this.accumulator,

config.getInt(ProducerConfig.MAX_IN_FLIGHT_REQUESTS_PER_CONNECTION) == 1,

config.getInt(ProducerConfig.MAX_REQUEST_SIZE_CONFIG),

(short) parseAcks(config.getString(ProducerConfig.ACKS_CONFIG)),

config.getInt(ProducerConfig.RETRIES_CONFIG),

this.metrics,

new SystemTime(),

clientId,

this.requestTimeoutMs);

String ioThreadName = "kafka-producer-network-thread" + (clientId.length() > 0 ? " | " + clientId : "");

this.ioThread = new KafkaThread(ioThreadName, this.sender, true);

this.ioThread.start();

this.errors = this.metrics.sensor("errors");

if (keySerializer == null) {

this.keySerializer = config.getConfiguredInstance(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG,

Serializer.class);

this.keySerializer.configure(config.originals(), true);

} else {

config.ignore(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG);

this.keySerializer = keySerializer;

}

if (valueSerializer == null) {

this.valueSerializer = config.getConfiguredInstance(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG,

Serializer.class);

this.valueSerializer.configure(config.originals(), false);

} else {

config.ignore(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG);

this.valueSerializer = valueSerializer;

}

// load interceptors and make sure they get clientId

userProvidedConfigs.put(ProducerConfig.CLIENT_ID_CONFIG, clientId);

List<ProducerInterceptor<K, V>> interceptorList = (List) (new ProducerConfig(userProvidedConfigs)).getConfiguredInstances(ProducerConfig.INTERCEPTOR_CLASSES_CONFIG,

ProducerInterceptor.class);

this.interceptors = interceptorList.isEmpty() ? null : new ProducerInterceptors<>(interceptorList);

config.logUnused();

AppInfoParser.registerAppInfo(JMX_PREFIX, clientId);

log.debug("Kafka producer started");

} catch (Throwable t) {

// call close methods if internal objects are already constructed

// this is to prevent resource leak. see KAFKA-2121

close(0, TimeUnit.MILLISECONDS, true);

// now propagate the exception

throw new KafkaException("Failed to construct kafka producer", t);

}

}

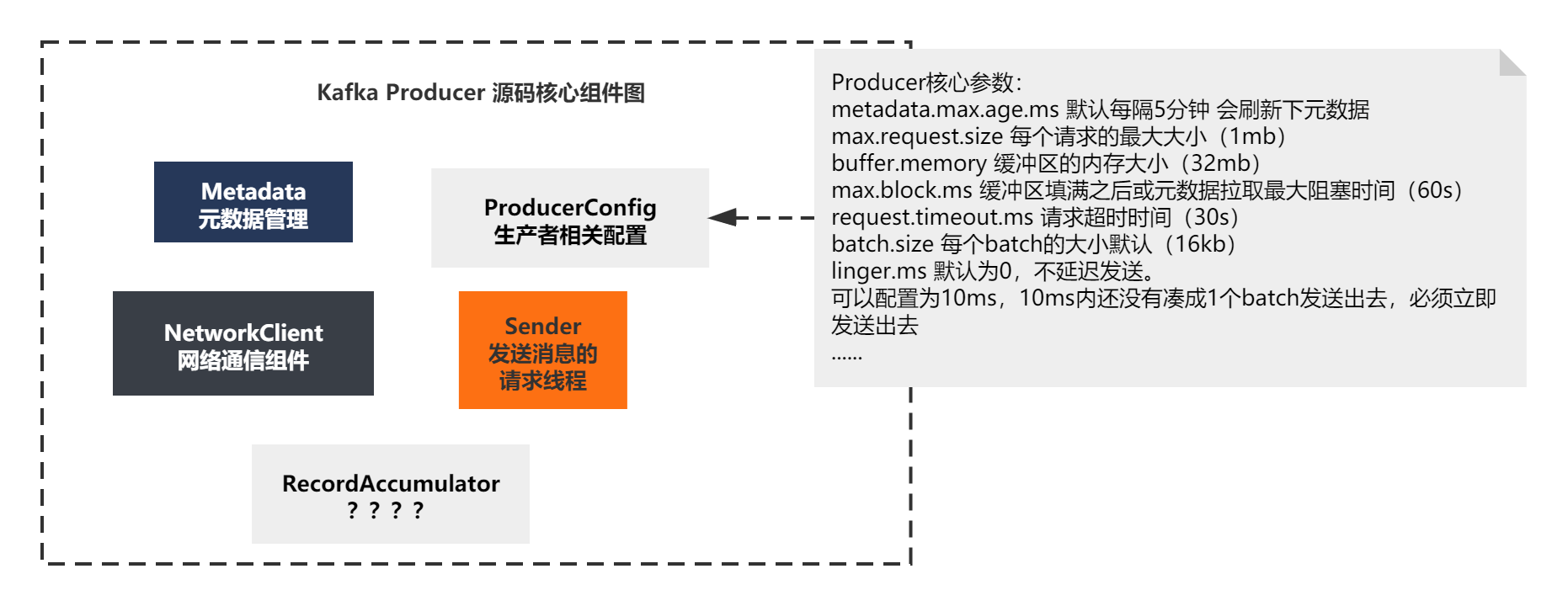

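This constructor contains quite a lot of code, but that's fine. Let's skim its outline first:

1) It mostly sets a batch of Producer parameters from the ProducerConfig object parsed earlier.

2) new Metadata(): this looks like a component and, judging by the name, it is probably responsible for metadata.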

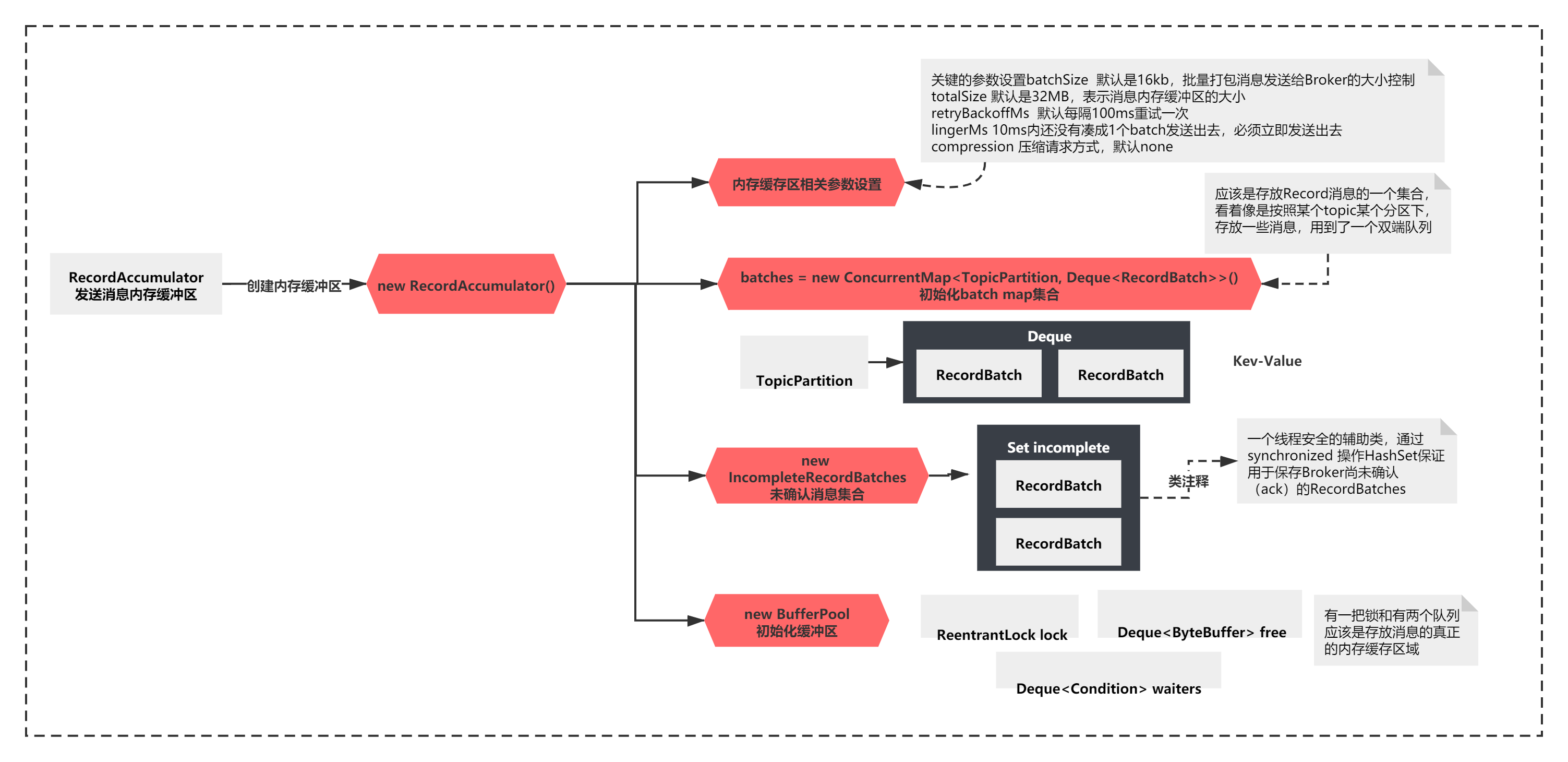

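3) new RecordAccumulator() should also be a component. It is not yet clear what it does; the name translates literally to "record accumulator".

4) new NetworkClient() is obviously a network communication component.

5) new Sender() and new KafkaThread() create a Runnable and start it on a single thread. This looks like the thread that sends messages.

6) new ProducerInterceptors() appears to be related to interceptors.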

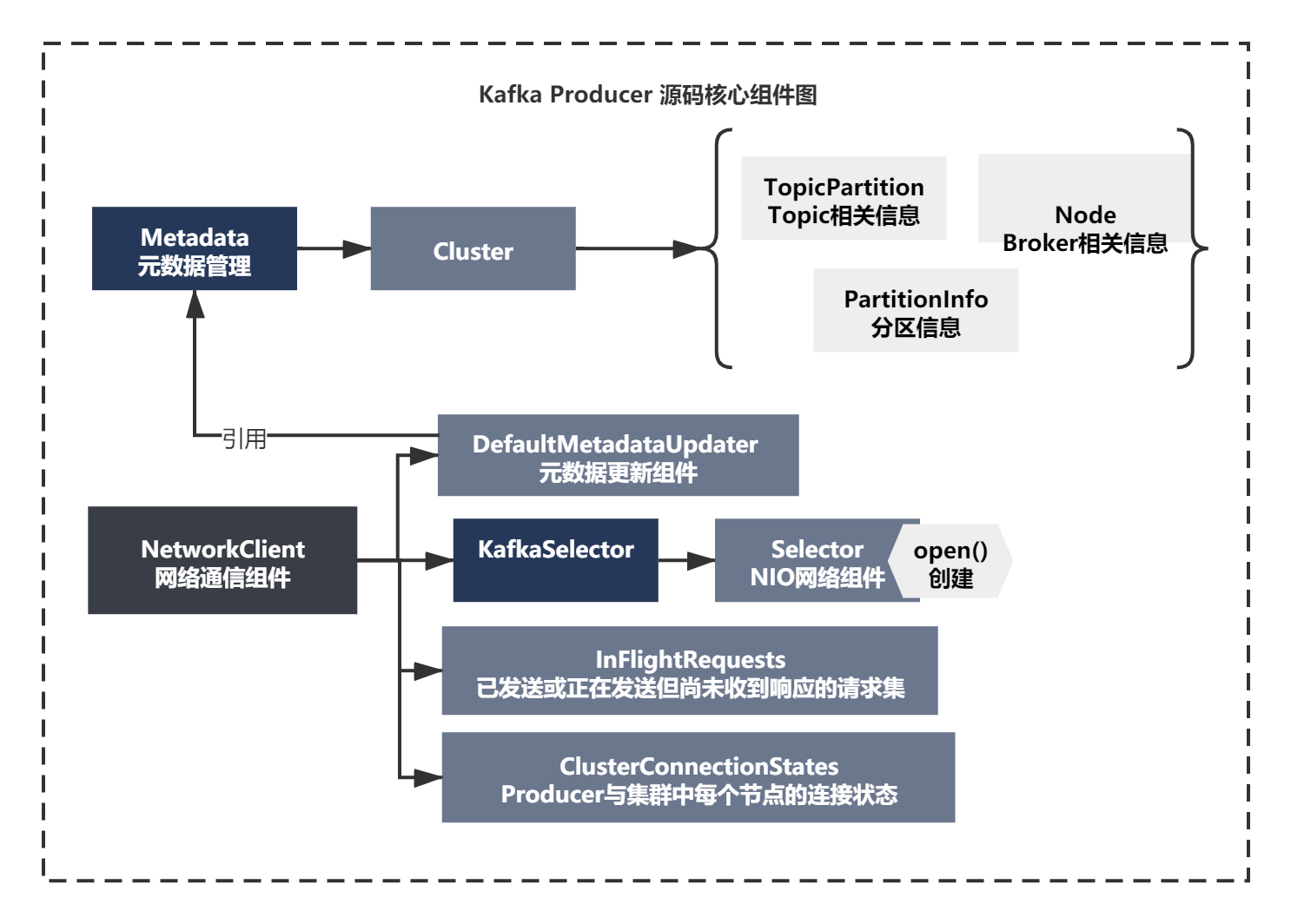

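As you can see, the core outline of this constructor boils down to the six points above. We can summarize them in a component diagram:

What exactly is RecordAccumulator?

We now know what the main components are, but the name RecordAccumulator doesn't reveal much. What to do? Check whether the class has a Javadoc comment.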

/**

* This class acts as a queue that accumulates records into {@link org.apache.kafka.common.record.MemoryRecords}

* instances to be sent to the server.

* (Author's note: this class uses a queue to accumulate Records that are waiting to be sent to the server, i.e. the Broker.)

* <p>

* The accumulator uses a bounded amount of memory and append calls will block when that memory is exhausted, unless

* this behavior is explicitly disabled.

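* (Author's note: the accumulator works with a bounded amount of memory, so unless blocking is disabled, append calls block once that limit is reached.)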

*/

public final class RecordAccumulator {

}

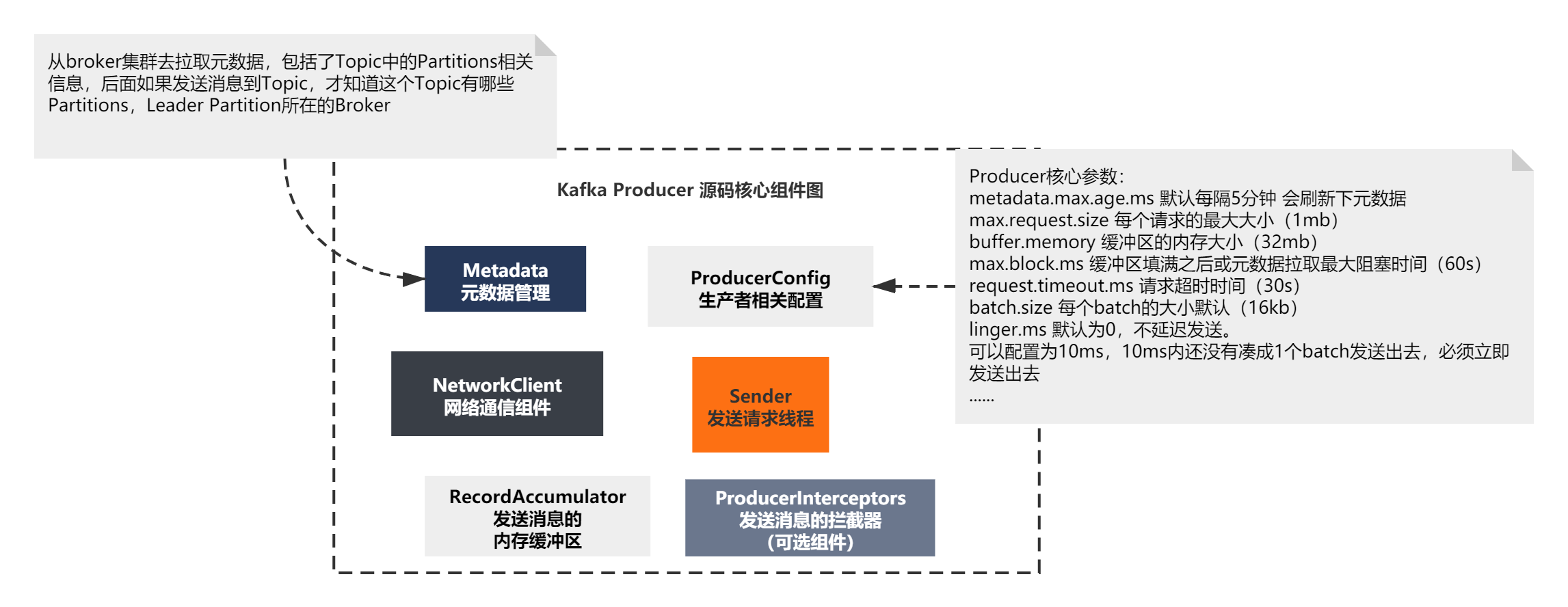

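After reading the comment, we have a rough idea of RecordAccumulator. It is a record accumulator, and a Record can be seen as the abstraction of a single message, so it is really a message accumulator: it buffers messages in an in-memory queue until they are sent to the Broker. We can therefore call it the in-memory buffer for outgoing messages.

What exactly is Metadata?

The Metadata component may also be unfamiliar to some readers. Metadata is data that describes other data. For example, the metadata of a file on macOS or Windows is its size, location, creation time, modification time, and so on.

So what is the metadata of a KafkaProducer? Here we need to review a few concepts:

Kafka review tips: what are Topic, Partition, Record, Leader Partition, Follower Partition, and Replica?

These are the basic concepts Kafka uses to manage messages.

Topic: the logical structure Kafka uses to organize messages. A Topic can have multiple Partitions, which provide distributed storage and make it possible to handle massive volumes of data.

Partition: the storage structure that holds many messages, corresponding to log files on disk. Kafka usually calls the files it stores messages in "logs"; they are really just sequences of messages.

Record: the abstraction of a single message.

For high availability, the copies of a Partition play one of two roles, leader or follower, and each copy lives on a Broker. A follower is a copy of the leader.

Replica: a copy. Both the leader and the followers hold a copy of the messages and back each other up, so a replica can refer to either a leader or a follower.

With these basics in mind, metadata is much easier to understand.

To send a message to a Broker, the Producer at least needs to know where to send it. That requires descriptive information, and this descriptive information is the metadata needed for sending messages.

A Producer generally has to fetch metadata from the broker cluster, including the Partition information of each Topic. Only then does it know, when sending to a Topic, which Partitions the Topic has and which Broker hosts each Leader Partition.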
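To make the idea concrete, here is a minimal sketch of the kind of lookup that metadata enables: given a Topic and a Partition number, find the Broker that hosts the leader. The class MetadataLookupSketch, the PartitionMeta type, and the broker addresses are hypothetical names made up for illustration; they are not Kafka classes.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

public class MetadataLookupSketch {
    /** Hypothetical stand-in for partition metadata: one partition and the broker leading it. */
    public static final class PartitionMeta {
        final String topic;
        final int partition;
        final String leaderBroker; // "host:port" of the leader, or null if no leader is alive

        public PartitionMeta(String topic, int partition, String leaderBroker) {
            this.topic = topic;
            this.partition = partition;
            this.leaderBroker = leaderBroker;
        }
    }

    // topic name -> all known partitions of that topic
    private final Map<String, List<PartitionMeta>> partitionsByTopic = new HashMap<>();

    public void add(PartitionMeta p) {
        partitionsByTopic.computeIfAbsent(p.topic, t -> new ArrayList<>()).add(p);
    }

    /** Number of partitions known for the topic (0 if the topic is unknown). */
    public int partitionCount(String topic) {
        return partitionsByTopic.getOrDefault(topic, Collections.emptyList()).size();
    }

    /** Address of the leader for the given topic-partition, or null if it is unknown. */
    public String leaderFor(String topic, int partition) {
        for (PartitionMeta p : partitionsByTopic.getOrDefault(topic, Collections.emptyList())) {
            if (p.partition == partition) {
                return p.leaderBroker;
            }
        }
        return null;
    }

    public static void main(String[] args) {
        MetadataLookupSketch meta = new MetadataLookupSketch();
        meta.add(new PartitionMeta("orders", 0, "broker-1:9092"));
        meta.add(new PartitionMeta("orders", 1, "broker-2:9092"));
        System.out.println(meta.leaderFor("orders", 1));
    }
}
```

Without such a table the Producer has no way to decide where a message for a given Partition should go, which is why the metadata has to be available before the first send.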

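The final component diagram looks like this:

Producer core component: a closer look at Metadata

Now that we know the Producer mainly initializes the components above, understanding what each of them does is enough to understand most of how the Producer works.

Let's first look at what the Metadata component does.

Creating a Metadata instance is simple: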

/**

* Create a new Metadata instance

* @param refreshBackoffMs The minimum amount of time that must expire between metadata refreshes to avoid busy

* polling

* (Author's note: the shortest interval that must elapse between two metadata refreshes, to avoid busy polling.)

* @param metadataExpireMs The maximum amount of time that metadata can be retained without refresh

* (Author's note: the longest time metadata may be kept without being refreshed.)

*/

public Metadata(long refreshBackoffMs, long metadataExpireMs) {

this.refreshBackoffMs = refreshBackoffMs;

this.metadataExpireMs = metadataExpireMs;

this.lastRefreshMs = 0L;

this.lastSuccessfulRefreshMs = 0L;

this.version = 0;

this.cluster = Cluster.empty();

this.needUpdate = false;

this.topics = new HashSet<String>();

this.listeners = new ArrayList<>();

this.needMetadataForAllTopics = false;

}

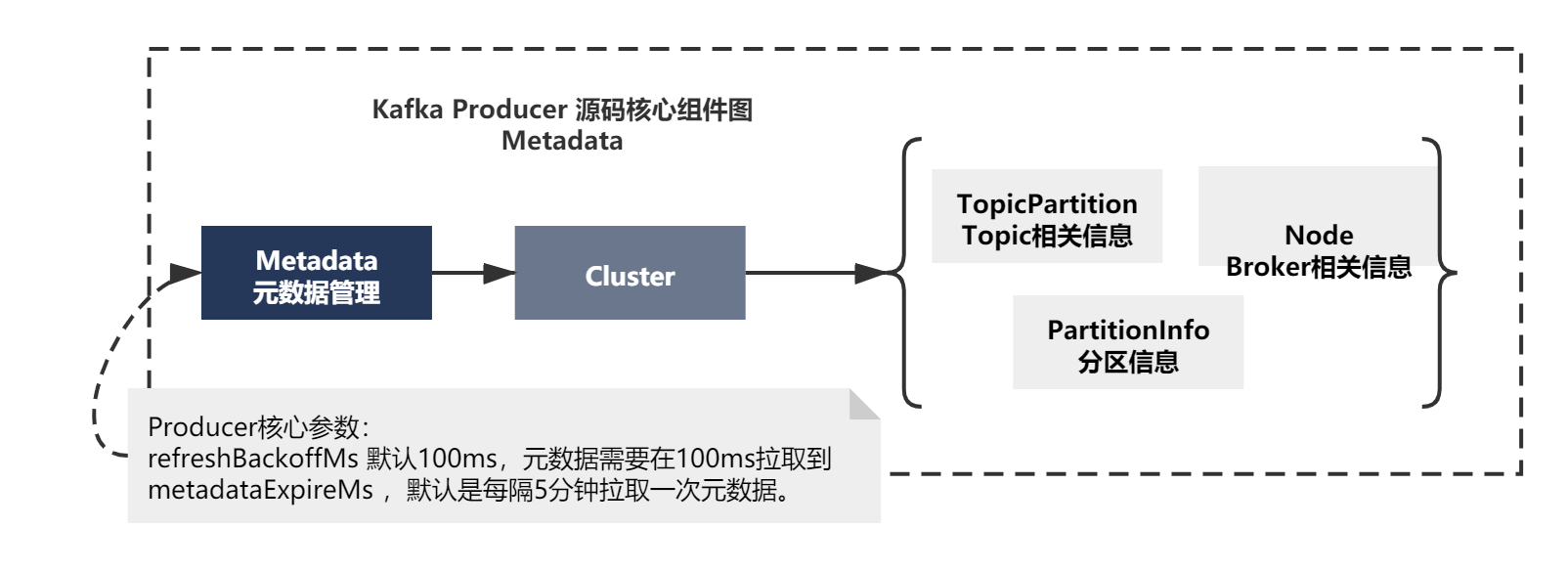

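The comments on this constructor tell us that the Metadata object is refreshed periodically; in other words, it should periodically pull the core metadata from the Broker to the Producer.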
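How the two constructor parameters might interact can be sketched as follows. This is an illustration written for this article under my own assumptions, not code quoted from the Kafka source: the class name and its methods are made up, and it only shows one plausible way to combine "do not refresh more often than refreshBackoffMs" with "refresh at the latest after metadataExpireMs".

```java
public class MetadataRefreshTimingSketch {
    private final long refreshBackoffMs;   // minimum gap between two refresh attempts
    private final long metadataExpireMs;   // maximum age of metadata before a refresh is due
    private long lastRefreshMs = 0L;
    private long lastSuccessfulRefreshMs = 0L;
    private boolean needUpdate = false;

    public MetadataRefreshTimingSketch(long refreshBackoffMs, long metadataExpireMs) {
        this.refreshBackoffMs = refreshBackoffMs;
        this.metadataExpireMs = metadataExpireMs;
    }

    /** Somebody noticed the metadata is stale or incomplete and asks for a refresh. */
    public void requestUpdate() {
        this.needUpdate = true;
    }

    /** Record a refresh that succeeded at the given time. */
    public void recordSuccessfulRefresh(long nowMs) {
        this.lastRefreshMs = nowMs;
        this.lastSuccessfulRefreshMs = nowMs;
        this.needUpdate = false;
    }

    /** Milliseconds to wait before the next refresh should be attempted (0 or less means now). */
    public long timeToNextUpdate(long nowMs) {
        // If an update was requested, expiry no longer matters; otherwise wait until the data expires.
        long timeToExpire = needUpdate ? 0 : Math.max(lastSuccessfulRefreshMs + metadataExpireMs - nowMs, 0);
        // Even a requested update has to respect the backoff since the last attempt.
        long timeToAllowUpdate = lastRefreshMs + refreshBackoffMs - nowMs;
        return Math.max(timeToExpire, timeToAllowUpdate);
    }
}
```

With a backoff of 100 ms and an expiry of 5 minutes, a refresh that succeeded at t=1000 is next due 300000 ms later; if an update is requested in the meantime, the wait shrinks to whatever is left of the 100 ms backoff.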

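The constructor's outline is:

1) It initializes some settings whose meaning can mostly be guessed from the names and comments.

The default values can be found in the static ConfigDef initialization covered earlier.

refreshBackoffMs: the minimum time that must elapse between metadata refreshes, to avoid busy polling. Default 100 ms.

metadataExpireMs: the maximum time metadata is kept without a refresh. By default metadata is fetched every 5 minutes.

lastRefreshMs: timestamp of the most recent metadata fetch.

lastSuccessfulRefreshMs: timestamp of the most recent successful metadata fetch.

version: version number of the metadata, incremented on every update.

cluster: the key one; the object that wraps the metadata itself.

needUpdate: flag indicating whether a fetch is needed.

topics: the set of Topics being tracked.

listeners: callbacks invoked when the metadata changes.

needMetadataForAllTopics: defaults to false; its purpose is not clear yet.

2) It initializes the Cluster metadata object.

Among the fields above, the metadata ultimately ends up in the Cluster object. Let's see what data it holds: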

/**
 * A representation of a subset of the nodes, topics, and partitions in the Kafka cluster.
 */
public final class Cluster {

    private final boolean isBootstrapConfigured;

    // Kafka Broker nodes
    private final List<Node> nodes;

    // Topics this client is not authorized to access
    private final Set<String> unauthorizedTopics;

    // TopicPartition: the basic relationship between a Topic and a Partition
    // PartitionInfo: details of a Partition, such as the ISR (in-sync replica) list, the Leader, and the Follower nodes
    private final Map<TopicPartition, PartitionInfo> partitionsByTopicPartition;

    // Which partitions each topic has
    private final Map<String, List<PartitionInfo>> partitionsByTopic;

    // Which partitions of each topic are currently available; a partition without a live leader is unavailable
    private final Map<String, List<PartitionInfo>> availablePartitionsByTopic;

    // Which partitions live on each broker
    private final Map<Integer, List<PartitionInfo>> partitionsByNode;

    // broker.id -> Node
    private final Map<Integer, Node> nodesById;

    // initialization methods omitted

}

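Together these describe the whole Kafka cluster, for example:

Node: the IP, port, and so on of a Kafka Broker.

TopicPartition: the basic relationship between a Topic and a Partition.

PartitionInfo: the details of a Partition, such as the ISR (in-sync replica) list, the Leader, and the Follower nodes.

I have annotated the remaining fields above with their rough meaning. A general impression is enough; all you need to know is that they are all metadata about Topics.

If you ask how I know all this, the answer is simple: I debugged it. Once metadata has been fetched later on, you can inspect the data and it becomes clear. Reading source code through the debugger suits this scenario well. We haven't downloaded or imported the Kafka source yet; it is enough to write a HelloWorld, let Maven download the sources of the jar automatically, and step through the client code in the debugger.

Be sure to apply the source-reading methods and ideas I mentioned earlier flexibly.

So the metadata object mainly looks like this:

Creating the Metadata in KafkaProducer is not complicated. What happens after it is created? The KafkaProducer constructor calls the metadata.update method.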

private KafkaProducer(ProducerConfig config, Serializer<K> keySerializer, Serializer<V> valueSerializer) {
    // parameter setup omitted...

    this.metadata = new Metadata(retryBackoffMs, config.getLong(ProducerConfig.METADATA_MAX_AGE_CONFIG));

    // initialization of RecordAccumulator, NetworkClient, Sender, and other components omitted...

    this.metadata.update(Cluster.bootstrap(addresses), time.milliseconds());

    // omitted...
}

Is this where the metadata is fetched? Let's look at the update method:

/**

* Update the cluster metadata

*/

public synchronized void update(Cluster cluster, long now) {

this.needUpdate = false;

this.lastRefreshMs = now;

this.lastSuccessfulRefreshMs = now;

this.version += 1;

for (Listener listener: listeners)

listener.onMetadataUpdate(cluster);

// Do this after notifying listeners as subscribed topics' list can be changed by listeners

this.cluster = this.needMetadataForAllTopics ? getClusterForCurrentTopics(cluster) : cluster;

notifyAll();

log.debug("Updated cluster metadata version {} to {}", this.version, this.cluster);

}

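Since listeners was initialized as an empty list and needMetadataForAllTopics is false, the method simply stores the Cluster it was given and then calls notifyAll() on the Metadata object to wake any threads waiting on it. At this point nobody is waiting, so in effect nothing happens. No metadata is fetched or refreshed from a Broker.

So this call does almost nothing, which means no metadata is fetched when the KafkaProducer is created. It only initializes a Metadata object whose Cluster holds the configured bootstrap addresses and no Topic or Partition information yet.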
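Why would update() bump a version and call notifyAll() at all? A plausible reading is that some other thread can wait on the Metadata object until the version moves forward. The sketch below illustrates that wait/notifyAll pattern in isolation. It is my own simplified illustration: the class VersionedMetadataSketch and its awaitUpdate method are hypothetical and are not copied from Kafka.

```java
public class VersionedMetadataSketch {
    private int version = 0;

    public synchronized int version() {
        return version;
    }

    /** Called by whoever received fresh metadata: bump the version and wake all waiters. */
    public synchronized void update() {
        this.version += 1;
        notifyAll();
    }

    /**
     * Block until the version is newer than lastVersion or maxWaitMs has elapsed.
     * Returns true if an update arrived in time, false on timeout or interruption.
     */
    public synchronized boolean awaitUpdate(int lastVersion, long maxWaitMs) {
        long deadline = System.currentTimeMillis() + maxWaitMs;
        while (version <= lastVersion) {          // loop guards against spurious wakeups
            long remaining = deadline - System.currentTimeMillis();
            if (remaining <= 0) {
                return false;
            }
            try {
                wait(remaining);                  // releases the monitor while waiting
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        VersionedMetadataSketch metadata = new VersionedMetadataSketch();
        int seen = metadata.version();
        Thread updater = new Thread(metadata::update);
        updater.start();
        System.out.println("updated in time: " + metadata.awaitUpdate(seen, 2000));
    }
}
```

During construction there is no waiter yet, which matches the observation above that the notifyAll() call has no visible effect at this stage.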

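The key points of the whole Metadata process are shown in the figure below:

At this point you will notice that reading source code is not always smooth sailing. All the branches and code paths can leave you disoriented, and a method like update() above can easily mislead you.

Don't be discouraged when that happens. Keep the core outline straight, draw plenty of diagrams, note down the key logic first, and set the confusing part aside while you continue analyzing other scenarios and logic. Once you have analyzed enough of them, and gone over them a few times, the logic you didn't understand before will gradually click and everything will connect.

Producer core component: a closer look at RecordAccumulator

Having gone through the creation of the metadata component in detail, let's move on to the next component, RecordAccumulator, the in-memory message buffer.

From the comment we already have a rough idea of RecordAccumulator. It is a record accumulator, and a Record can be seen as the abstraction of a single message, so it is really a message accumulator: it buffers messages in an in-memory queue until they are sent to the Broker. We can therefore call it the in-memory buffer for outgoing messages.

The code that creates it is mainly as follows: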

/**

* Create a new record accumulator

*

* @param batchSize The size to use when allocating {@link org.apache.kafka.common.record.MemoryRecords} instances

* @param totalSize The maximum memory the record accumulator can use.

* @param compression The compression codec for the records

* @param lingerMs An artificial delay time to add before declaring a records instance that isn't full ready for

* sending. This allows time for more records to arrive. Setting a non-zero lingerMs will trade off some

* latency for potentially better throughput due to more batching (and hence fewer, larger requests).

* @param retryBackoffMs An artificial delay time to retry the produce request upon receiving an error. This avoids

* exhausting all retries in a short period of time.

* @param metrics The metrics

* @param time The time instance to use

*/

public RecordAccumulator(int batchSize,

long totalSize,

CompressionType compression,

long lingerMs,

long retryBackoffMs,

Metrics metrics,

Time time) {

this.drainIndex = 0;

this.closed = false;

this.flushesInProgress = new AtomicInteger(0);

this.appendsInProgress = new AtomicInteger(0);

this.batchSize = batchSize;

this.compression = compression;

this.lingerMs = lingerMs;

this.retryBackoffMs = retryBackoffMs;

this.batches = new CopyOnWriteMap<>();

String metricGrpName = "producer-metrics";

this.free = new BufferPool(totalSize, batchSize, metrics, time, metricGrpName);

this.incomplete = new IncompleteRecordBatches();

this.muted = new HashSet<>();

this.time = time;

registerMetrics(metrics, metricGrpName);

}

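The comments already tell us the outline of this method. It mainly:

1) sets some parameters: batchSize, totalSize, retryBackoffMs, lingerMs, compression, and so on

2) initializes some data structures, for example batches is a new CopyOnWriteMap<>()

3) initializes the BufferPool and IncompleteRecordBatches

1) It sets some parameters: batchSize, totalSize, retryBackoffMs, lingerMs, compression, and so on

First come the parameters. Their defaults and basic roles can be seen in the ConfigDef initialization from the previous installment.

batchSize: 16 KB by default. Controls the size of the batches in which messages are packed and sent to the Broker.

totalSize: 32 MB by default. The size of the in-memory message buffer.

retryBackoffMs: 100 ms by default. The wait between retries.

lingerMs: how long to wait for a batch to fill up. With a value of 10 ms, for example, a batch that has not filled up within 10 ms must be sent anyway. The default in this version is 0, meaning no extra wait.

compression: the compression type for requests. Default none.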

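2) It initializes some data structures, for example batches is a new CopyOnWriteMap<>()

This looks like a collection that stores Record messages, apparently grouping them by Topic and Partition, with a double-ended queue (Deque) of batches for each partition.

ConcurrentMap<TopicPartition, Deque<RecordBatch>> batches = new CopyOnWriteMap<>();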
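The shape of that structure, a map from partition to a deque of batches, can be illustrated with a small stand-alone sketch. Everything here is a simplification I made up for illustration: the partition is a plain String, a batch is a List<String>, the batch limit is a record count rather than a byte size, and a ConcurrentHashMap stands in for Kafka's internal CopyOnWriteMap.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;

public class BatchQueueSketch {
    private final int batchSize; // max records per batch in this sketch (Kafka limits bytes instead)
    private final ConcurrentMap<String, Deque<List<String>>> batches = new ConcurrentHashMap<>();

    public BatchQueueSketch(int batchSize) {
        this.batchSize = batchSize;
    }

    /** Append a record to the newest batch of the partition, opening a new batch when it is full. */
    public void append(String topicPartition, String record) {
        Deque<List<String>> deque = batches.computeIfAbsent(topicPartition, k -> new ArrayDeque<>());
        synchronized (deque) { // ArrayDeque is not thread-safe, so guard each deque separately
            List<String> last = deque.peekLast();
            if (last == null || last.size() >= batchSize) {
                last = new ArrayList<>();
                deque.addLast(last);
            }
            last.add(record);
        }
    }

    /** Remove and return the oldest batch of the partition, or null if there is none. */
    public List<String> drainOldest(String topicPartition) {
        Deque<List<String>> deque = batches.get(topicPartition);
        if (deque == null) {
            return null;
        }
        synchronized (deque) {
            return deque.pollFirst();
        }
    }

    /** Number of batches currently queued for the partition. */
    public int batchCount(String topicPartition) {
        Deque<List<String>> deque = batches.get(topicPartition);
        if (deque == null) {
            return 0;
        }
        synchronized (deque) {
            return deque.size();
        }
    }
}
```

The double-ended queue fits this job because new records go in at the tail while the sending side takes the oldest batch from the head.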

3) It initializes the BufferPool and IncompleteRecordBatches

Creating IncompleteRecordBatches is fairly simple:

/*

* A threadsafe helper class to hold RecordBatches that haven't been ack'd yet

*/

private final static class IncompleteRecordBatches {

private final Set<RecordBatch> incomplete;

public IncompleteRecordBatches() {

this.incomplete = new HashSet<RecordBatch>();

}

public void add(RecordBatch batch) {

synchronized (incomplete) {

this.incomplete.add(batch);

}

}

public void remove(RecordBatch batch) {

synchronized (incomplete) {

boolean removed = this.incomplete.remove(batch);

if (!removed)

throw new IllegalStateException("Remove from the incomplete set failed. This should be impossible.");

}

}

public Iterable<RecordBatch> all() {

synchronized (incomplete) {

return new ArrayList<>(this.incomplete);

}

}

}

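From the comment we can see that it is a thread-safe helper class: it guards a HashSet with synchronized and holds the RecordBatches that the Broker has not yet acknowledged (ack'd).

new BufferPool, in turn, initializes the buffer. The code is as follows: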

public final class BufferPool {

private final long totalMemory;

private final int poolableSize;

private final ReentrantLock lock;

private final Deque<ByteBuffer> free;

private final Deque<Condition> waiters;

private long availableMemory;

private final Metrics metrics;

private final Time time;

private final Sensor waitTime;

/**

* Create a new buffer pool

*

* @param memory The maximum amount of memory that this buffer pool can allocate

* @param poolableSize The buffer size to cache in the free list rather than deallocating

* @param metrics instance of Metrics

* @param time time instance

* @param metricGrpName logical group name for metrics

*/

public BufferPool(long memory, int poolableSize, Metrics metrics, Time time, String metricGrpName) {

this.poolableSize = poolableSize;

this.lock = new ReentrantLock();

this.free = new ArrayDeque<ByteBuffer>();

this.waiters = new ArrayDeque<Condition>();

this.totalMemory = memory;

this.availableMemory = memory;

this.metrics = metrics;

this.time = time;

this.waitTime = this.metrics.sensor("bufferpool-wait-time");

MetricName metricName = metrics.metricName("bufferpool-wait-ratio",

metricGrpName,

"The fraction of time an appender waits for space allocation.");

this.waitTime.add(metricName, new Rate(TimeUnit.NANOSECONDS));

}

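It mainly consists of one lock and two queues: free holds ByteBuffers and waiters holds Conditions. This appears to be the memory region where messages are actually buffered.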
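To get a feel for how a lock plus a free list of ByteBuffers can form a reusable memory pool, here is a deliberately simplified sketch. It is my own illustration and not the Kafka implementation: the class SimpleBufferPool is made up, and where a real pool would make callers wait on a Condition when memory runs out, this version simply throws.

```java
import java.nio.ByteBuffer;
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.concurrent.locks.ReentrantLock;

public class SimpleBufferPool {
    private final int poolableSize;                      // buffers of exactly this size are reused
    private final ReentrantLock lock = new ReentrantLock();
    private final Deque<ByteBuffer> free = new ArrayDeque<>();
    private long availableMemory;                        // memory neither handed out nor parked in the free list

    public SimpleBufferPool(long totalMemory, int poolableSize) {
        this.availableMemory = totalMemory;
        this.poolableSize = poolableSize;
    }

    public ByteBuffer allocate(int size) {
        lock.lock();
        try {
            // Fast path: reuse a pooled buffer of the standard size.
            if (size == poolableSize && !free.isEmpty()) {
                return free.pollFirst();
            }
            long reclaimable = availableMemory + (long) free.size() * poolableSize;
            if (size > reclaimable) {
                // Simplification: a real pool would block here until memory is returned.
                throw new IllegalStateException("buffer pool exhausted");
            }
            // Give up pooled buffers until enough unpooled memory is available.
            while (availableMemory < size) {
                free.pollLast();
                availableMemory += poolableSize;
            }
            availableMemory -= size;
            return ByteBuffer.allocate(size);
        } finally {
            lock.unlock();
        }
    }

    public void deallocate(ByteBuffer buffer) {
        lock.lock();
        try {
            if (buffer.capacity() == poolableSize) {
                buffer.clear();
                free.addLast(buffer);                    // keep standard-size buffers for reuse
            } else {
                availableMemory += buffer.capacity();    // odd sizes just return their memory
            }
        } finally {
            lock.unlock();
        }
    }

    /** Total memory that is not currently handed out to callers. */
    public long unallocatedMemory() {
        lock.lock();
        try {
            return availableMemory + (long) free.size() * poolableSize;
        } finally {
            lock.unlock();
        }
    }
}
```

The point of pooling buffers of one standard size is that batches of that size can be recycled without allocating and garbage-collecting a new buffer for every batch.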

整个过程如下所示:

你看过这些的组件的内部结构,其实可能并不知道它们到底是干嘛的,没关系,这里我们主要的目的本来就是初步就是对这些组件有个印象就可以了,之后分析某个组件的行为和作用的时候,才能更好的理解。

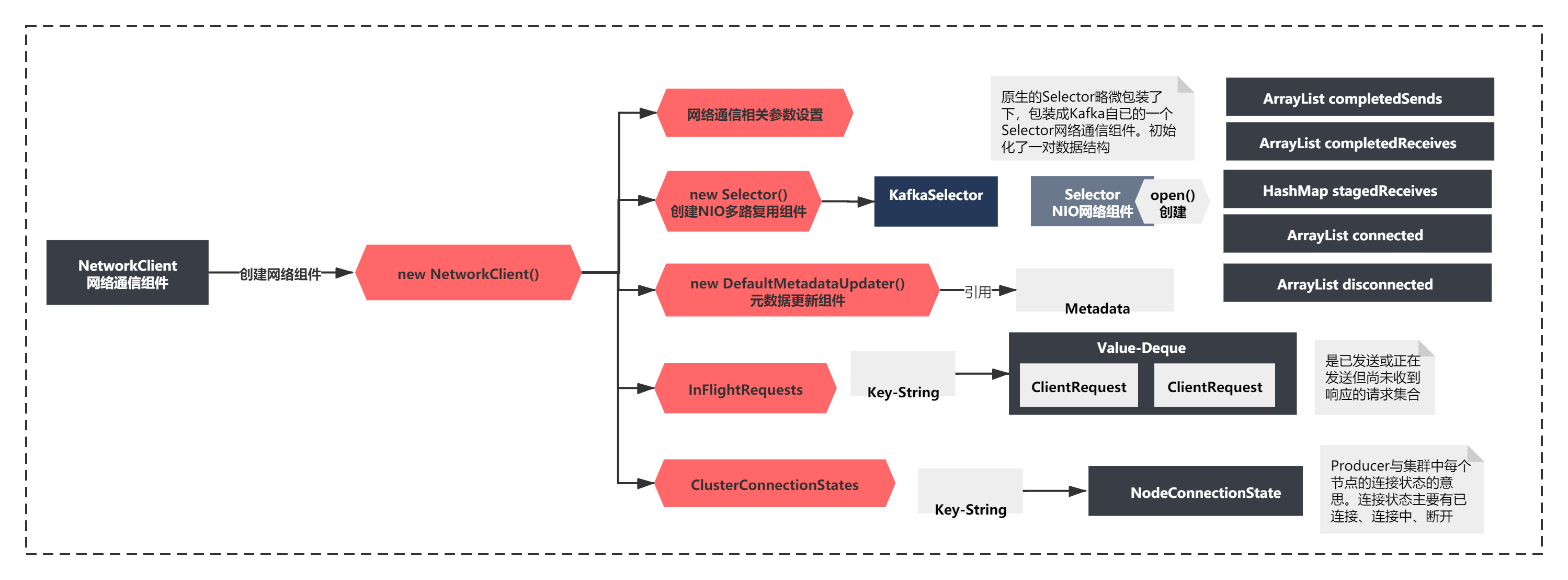

Producer核心组件—NetworkClient剖析

如果要拉去元数据或者发送消息,首先肯定要和Broker建立连接。之前分析KafkaProducer的源码脉络时,有一个网络通信组件NetworkClient,我们可以分析下这个组件怎么创建,做了哪些事情。看看元数据拉取会不会在这里呢?

private KafkaProducer(ProducerConfig config, Serializer<K> keySerializer, Serializer<V> valueSerializer) {
    // parameter setup omitted...

    // initialization of RecordAccumulator, Metadata, Sender, and other components omitted...

    NetworkClient client = new NetworkClient(
            // Kafka lightly wraps the native Selector into its own Selector network communication component
            new Selector(config.getLong(ProducerConfig.CONNECTIONS_MAX_IDLE_MS_CONFIG), this.metrics, time, "producer", channelBuilder),
            this.metadata,
            clientId,
            config.getInt(ProducerConfig.MAX_IN_FLIGHT_REQUESTS_PER_CONNECTION),
            config.getLong(ProducerConfig.RECONNECT_BACKOFF_MS_CONFIG),
            config.getInt(ProducerConfig.SEND_BUFFER_CONFIG),
            config.getInt(ProducerConfig.RECEIVE_BUFFER_CONFIG),
            this.requestTimeoutMs, time);

    // omitted...
}

private NetworkClient(MetadataUpdater metadataUpdater,

Metadata metadata,

Selectable selector,

String clientId,

int maxInFlightRequestsPerConnection,

long reconnectBackoffMs,

int socketSendBuffer,

int socketReceiveBuffer,

int requestTimeoutMs,

Time time) {

/* It would be better if we could pass `DefaultMetadataUpdater` from the public constructor, but it's not

* possible because `DefaultMetadataUpdater` is an inner class and it can only be instantiated after the

* super constructor is invoked.

*/

if (metadataUpdater == null) {

if (metadata == null)

throw new IllegalArgumentException("`metadata` must not be null");

// a component for updating metadata?

this.metadataUpdater = new DefaultMetadataUpdater(metadata);

} else {

this.metadataUpdater = metadataUpdater;

}

this.selector = selector;

this.clientId = clientId;

// the set of requests that have been sent or are being sent but have not yet received a response

this.inFlightRequests = new InFlightRequests(maxInFlightRequestsPerConnection);

// the state of the Producer's connection to each node in the cluster

this.connectionStates = new ClusterConnectionStates(reconnectBackoffMs);

this.socketSendBuffer = socketSendBuffer;

this.socketReceiveBuffer = socketReceiveBuffer;

this.correlation = 0;

this.randOffset = new Random();

this.requestTimeoutMs = requestTimeoutMs;

this.time = time;

}

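The creation of NetworkClient above mainly does the following:

1) It creates a Selector. If you know the Java NIO API, the name will be familiar: Selector, Buffer, and Channel are the three main NIO components. Kafka lightly wraps the native Selector into its own Selector network communication component.

I won't go into how NIO works here. You can simply think of the Selector as the component that watches network connections for connection-established events and read/write readiness.

2) It sets a batch of configuration parameters.

3) It creates a DefaultMetadataUpdater and passes metadata to it. Guessing from the name, this seems to be a component that updates metadata. Have we found the metadata fetch logic? We should pay close attention to how this class is used later.

4) It creates InFlightRequests and ClusterConnectionStates. From the comments on these two classes we can see that InFlightRequests is the set of requests that have been sent or are being sent but have not yet received a response, and ClusterConnectionStates is the state of the Producer's connection to each node in the cluster.

The whole NetworkClient initialization can be summarized in the figure below:

Having seen the outline of the creation, let's look at the details (outline first, details second). If the information above isn't obvious to you at a glance, you need to look at the details of each class to confirm it.

Detail 1: first, the code that creates the Selector: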

public Selector(long connectionMaxIdleMS, Metrics metrics, Time time, String metricGrpPrefix, ChannelBuilder channelBuilder) {

this(NetworkReceive.UNLIMITED, connectionMaxIdleMS, metrics, time, metricGrpPrefix, new HashMap<String, String>(), true, channelBuilder);

}

public Selector(int maxReceiveSize, long connectionMaxIdleMs, Metrics metrics, Time time, String metricGrpPrefix, Map<String, String> metricTags, boolean metricsPerConnection, ChannelBuilder channelBuilder) {

try {

// under the hood it still creates an NIO Selector

this.nioSelector = java.nio.channels.Selector.open();

} catch (IOException e) {

throw new KafkaException(e);

}

this.maxReceiveSize = maxReceiveSize;

this.connectionsMaxIdleNanos = connectionMaxIdleMs * 1000 * 1000;

this.time = time;

this.metricGrpPrefix = metricGrpPrefix;

this.metricTags = metricTags;

this.channels = new HashMap<>();

this.completedSends = new ArrayList<>();

this.completedReceives = new ArrayList<>();

this.stagedReceives = new HashMap<>();

this.immediatelyConnectedKeys = new HashSet<>();

this.connected = new ArrayList<>();

this.disconnected = new ArrayList<>();

this.failedSends = new ArrayList<>();

this.sensors = new SelectorMetrics(metrics);

this.channelBuilder = channelBuilder;

// initial capacity and load factor are default, we set them explicitly because we want to set accessOrder = true

this.lruConnections = new LinkedHashMap<>(16, .75F, true);

currentTimeNanos = time.nanoseconds();

nextIdleCloseCheckTime = currentTimeNanos + connectionsMaxIdleNanos;

this.metricsPerConnection = metricsPerConnection;

}

As you can see, creating Kafka's Selector essentially still creates an NIO Selector: java.nio.channels.Selector.open();
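If the native NIO Selector is new to you, the following small sketch shows the basic cycle of registering a channel and waiting for readiness, using only the JDK. To stay self-contained it uses an in-process java.nio.channels.Pipe instead of a socket to a Broker; the class name NioSelectorSketch and the roundTrip helper are made up for this example, and the sketch assumes the message is short enough to arrive in a single read.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.charset.StandardCharsets;

public class NioSelectorSketch {
    /** Writes the message into a pipe and reads it back once the selector reports the source readable. */
    public static String roundTrip(String message) {
        try (Selector selector = Selector.open()) {
            Pipe pipe = Pipe.open();
            try (Pipe.SinkChannel sink = pipe.sink();
                 Pipe.SourceChannel source = pipe.source()) {
                // A channel must be non-blocking before it can be registered with a selector.
                source.configureBlocking(false);
                source.register(selector, SelectionKey.OP_READ);

                sink.write(ByteBuffer.wrap(message.getBytes(StandardCharsets.UTF_8)));

                StringBuilder received = new StringBuilder();
                // Wait up to one second for at least one registered channel to become ready.
                if (selector.select(1000) > 0) {
                    for (SelectionKey key : selector.selectedKeys()) {
                        if (key.isReadable()) {
                            ByteBuffer buffer = ByteBuffer.allocate(256);
                            int n = ((Pipe.SourceChannel) key.channel()).read(buffer);
                            if (n > 0) {
                                received.append(new String(buffer.array(), 0, n, StandardCharsets.UTF_8));
                            }
                        }
                    }
                    // Selected keys are not cleared automatically; do it before the next select.
                    selector.selectedKeys().clear();
                }
                return received.toString();
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(roundTrip("hello selector"));
    }
}
```

A single thread can register many channels with one Selector and serve whichever becomes ready, which is presumably why a network client that talks to several Brokers builds on it.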

Detail 2: the initialization of DefaultMetadataUpdater does nothing special; it just keeps a reference to Metadata.

class DefaultMetadataUpdater implements MetadataUpdater {

// just keeps a reference to Metadata

/* the current cluster metadata */

private final Metadata metadata;

/* true iff there is a metadata request that has been sent and for which we have not yet received a response */

private boolean metadataFetchInProgress;

/* the last timestamp when no broker node is available to connect */

private long lastNoNodeAvailableMs;

DefaultMetadataUpdater(Metadata metadata) {

this.metadata = metadata;

this.metadataFetchInProgress = false;

this.lastNoNodeAvailableMs = 0;

}

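Detail 3: the comment on InFlightRequests does indeed say it is the set of requests that have been sent or are being sent but have not yet received a response. It's fine if this doesn't mean much yet; we will see where it is used later.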

/**

* The set of requests which have been sent or are being sent but haven't yet received a response

* (Author's note: in other words, the requests that are still in flight.)

*/

final class InFlightRequests {

private final int maxInFlightRequestsPerConnection;

private final Map<String, Deque<ClientRequest>> requests = new HashMap<String, Deque<ClientRequest>>();

public InFlightRequests(int maxInFlightRequestsPerConnection) {

this.maxInFlightRequestsPerConnection = maxInFlightRequestsPerConnection;

}

}
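The role of maxInFlightRequestsPerConnection can be illustrated with a small stand-alone sketch: keep one queue of unanswered requests per node and refuse to send more once the queue reaches the limit. This is my own illustration, not the Kafka class; the name InFlightSketch, its methods, and the use of plain Strings for nodes and requests are assumptions made for the example.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.Map;

public class InFlightSketch {
    private final int maxInFlightPerConnection;
    // node id -> requests sent to that node that have not been answered yet (newest first)
    private final Map<String, Deque<String>> requests = new HashMap<>();

    public InFlightSketch(int maxInFlightPerConnection) {
        this.maxInFlightPerConnection = maxInFlightPerConnection;
    }

    /** True if another request may be sent to the node without exceeding the limit. */
    public boolean canSendMore(String node) {
        Deque<String> queue = requests.get(node);
        return queue == null || queue.size() < maxInFlightPerConnection;
    }

    /** Record a request as sent and awaiting its response. */
    public void add(String node, String request) {
        if (!canSendMore(node)) {
            throw new IllegalStateException("too many in-flight requests for " + node);
        }
        requests.computeIfAbsent(node, k -> new ArrayDeque<>()).addFirst(request);
    }

    /** A response arrived: complete the oldest outstanding request for the node. */
    public String completeNext(String node) {
        Deque<String> queue = requests.get(node);
        return queue == null ? null : queue.pollLast();
    }
}
```

With a limit of 1 only a single request per connection is ever outstanding, which is the condition the KafkaProducer constructor checks when it passes `== 1` as the Sender's guaranteeMessageOrder argument.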

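Detail 4: the comment on ClusterConnectionStates likewise says it is the state of the Producer's connection to each node in the cluster. The connection states are connected, connecting, and disconnected.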

/**

* The state of our connection to each node in the cluster.

* (Author's note: here, the connection from the Producer to each node in the cluster.)

*

*/

final class ClusterConnectionStates {

private final long reconnectBackoffMs;

private final Map<String, NodeConnectionState> nodeState;

public ClusterConnectionStates(long reconnectBackoffMs) {

this.reconnectBackoffMs = reconnectBackoffMs;

this.nodeState = new HashMap<String, NodeConnectionState>();

}

/**

* The state of our connection to a node

*/

private static class NodeConnectionState {

ConnectionState state;

long lastConnectAttemptMs;

public NodeConnectionState(ConnectionState state, long lastConnectAttempt) {

this.state = state;

this.lastConnectAttemptMs = lastConnectAttempt;

}

public String toString() {

return "NodeState(" + state + ", " + lastConnectAttemptMs + ")";

}

}

/**

* The states of a node connection

* (Author's note: the states are connected, connecting, and disconnected.)

*/

public enum ConnectionState {

DISCONNECTED, CONNECTING, CONNECTED

}

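That completes the initialization of NetworkClient. I won't explain the parameters of the network component here; I will explain them when they are actually used. Explaining them now probably wouldn't make much sense yet.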
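As a preview of how the three connection states and reconnectBackoffMs might work together, here is a small sketch of a per-node state table that only allows a new connection attempt once the backoff has elapsed. It is an illustration under my own assumptions: the class ConnectionStatesSketch and its method names are made up and are not taken from the Kafka source.

```java
import java.util.HashMap;
import java.util.Map;

public class ConnectionStatesSketch {
    public enum State { DISCONNECTED, CONNECTING, CONNECTED }

    private static final class NodeState {
        State state;
        long lastConnectAttemptMs;

        NodeState(State state, long lastConnectAttemptMs) {
            this.state = state;
            this.lastConnectAttemptMs = lastConnectAttemptMs;
        }
    }

    private final long reconnectBackoffMs;
    private final Map<String, NodeState> nodeState = new HashMap<>();

    public ConnectionStatesSketch(long reconnectBackoffMs) {
        this.reconnectBackoffMs = reconnectBackoffMs;
    }

    /** A node may be connected to if it was never tried, or is disconnected and the backoff has passed. */
    public boolean canConnect(String node, long nowMs) {
        NodeState s = nodeState.get(node);
        if (s == null) {
            return true;
        }
        return s.state == State.DISCONNECTED && nowMs - s.lastConnectAttemptMs >= reconnectBackoffMs;
    }

    public void connecting(String node, long nowMs) {
        nodeState.put(node, new NodeState(State.CONNECTING, nowMs));
    }

    public void connected(String node) {
        known(node).state = State.CONNECTED;
    }

    public void disconnected(String node) {
        known(node).state = State.DISCONNECTED;
    }

    private NodeState known(String node) {
        NodeState s = nodeState.get(node);
        if (s == null) {
            throw new IllegalStateException("no connection state for " + node);
        }
        return s;
    }
}
```

The backoff keeps the client from hammering a Broker that is down with back-to-back connection attempts.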

I have roughly summarized all these details in the figure below:

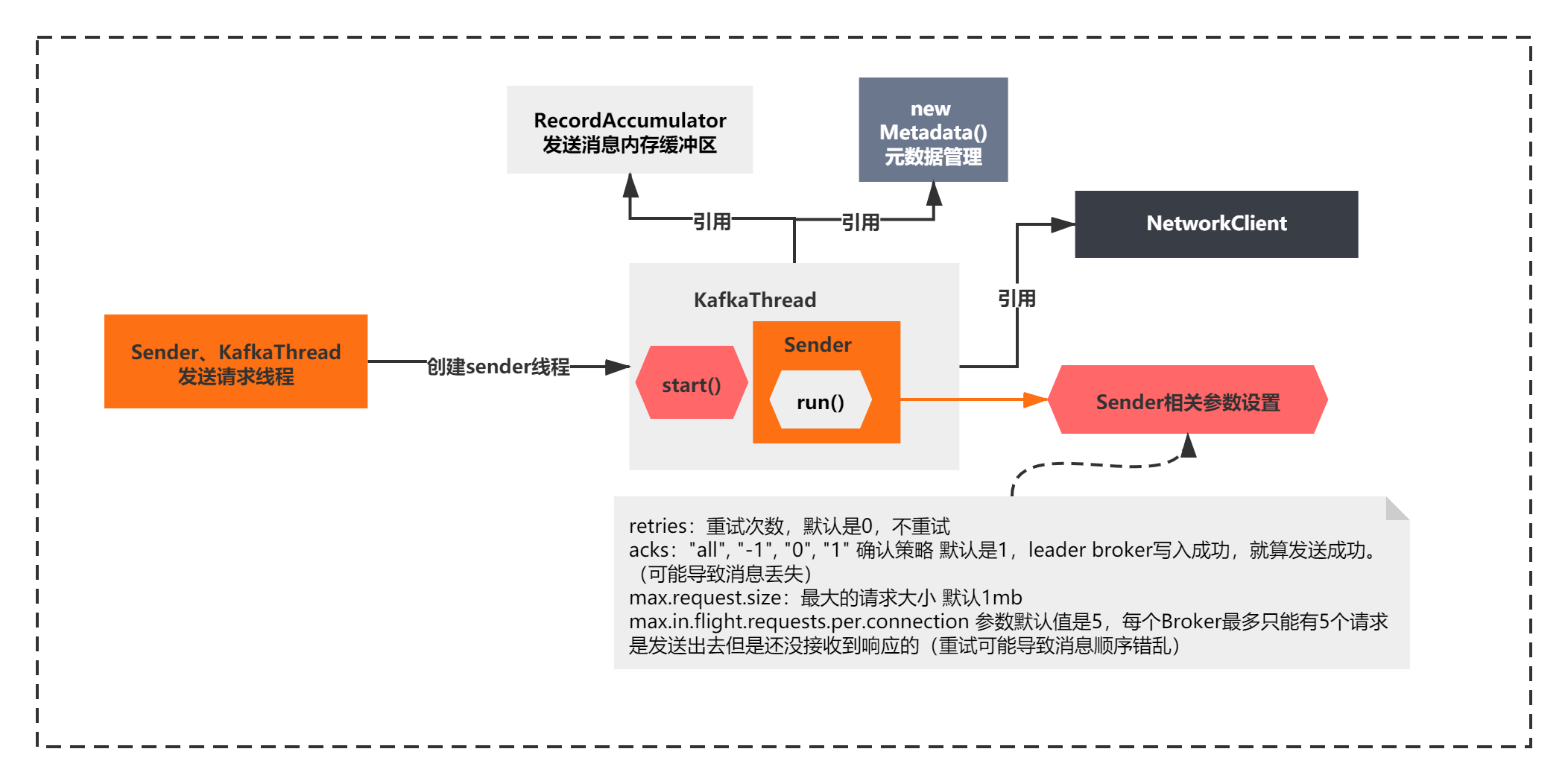

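Producer core component: a closer look at the Sender thread

We have now gone through the initialization of the network component NetworkClient, the metadata component Metadata, and RecordAccumulator, the in-memory buffer for outgoing messages. We know what each of them initializes, and we have summarized the component diagrams and noted the key information. We can now move on to the last core component, the Sender thread, and see what it does.

The Sender initialization logic is as follows: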

private KafkaProducer(ProducerConfig config, Serializer<K> keySerializer, Serializer<V> valueSerializer) {
    // parameter setup omitted...

    // initialization of RecordAccumulator, NetworkClient, Metadata, and other components omitted...

    this.sender = new Sender(client,
            this.metadata,
            this.accumulator,
            config.getInt(ProducerConfig.MAX_IN_FLIGHT_REQUESTS_PER_CONNECTION) == 1,
            config.getInt(ProducerConfig.MAX_REQUEST_SIZE_CONFIG),
            (short) parseAcks(config.getString(ProducerConfig.ACKS_CONFIG)),
            config.getInt(ProducerConfig.RETRIES_CONFIG),
            this.metrics,
            new SystemTime(),
            clientId,
            this.requestTimeoutMs);

    String ioThreadName = "kafka-producer-network-thread" + (clientId.length() > 0 ? " | " + clientId : "");
    this.ioThread = new KafkaThread(ioThreadName, this.sender, true);
    this.ioThread.start();

    // omitted...
}

public Sender(KafkaClient client,

Metadata metadata,

RecordAccumulator accumulator,

boolean guaranteeMessageOrder,

int maxRequestSize,

short acks,

int retries,

Metrics metrics,

Time time,

String clientId,

int requestTimeout) {

this.client = client;

this.accumulator = accumulator;

this.metadata = metadata;

this.guaranteeMessageOrder = guaranteeMessageOrder;

this.maxRequestSize = maxRequestSize;

this.running = true;

this.acks = acks;

this.retries = retries;

this.time = time;

this.clientId = clientId;

this.sensors = new SenderMetrics(metrics);

this.requestTimeout = requestTimeout;

}

public KafkaThread(final String name, Runnable runnable, boolean daemon) {

super(runnable, name);

setDaemon(daemon);

setUncaughtExceptionHandler(new Thread.UncaughtExceptionHandler() {

public void uncaughtException(Thread t, Throwable e) {

log.error("Uncaught exception in " + name + ": ", e);

}

});

}

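The core outline of this initialization is simple: it mainly hands the other components over to the Sender.

1) It sets some core Sender parameters:

retries: the number of retries. Default 0, meaning no retry.

acks: the acknowledgement policy, one of "all", "-1", "0", "1". Default 1: the send counts as successful once the leader broker has written the message (messages may be lost).

max.request.size: the maximum request size. Default 1 MB.

max.in.flight.requests.per.connection: default 5. At most 5 requests per Broker connection can have been sent without a response received yet (with retries, this can cause messages to arrive out of order).

2) It holds references to three other key components: the network component NetworkClient, the metadata component Metadata, and RecordAccumulator, the in-memory buffer for outgoing messages.

3) It then wraps the Sender, which is a Runnable, in a KafkaThread and starts the thread, which begins executing the Sender's run method.

The whole process is shown below:

How the run method executes is not our focus in this installment. I will analyze it in detail in the next few installments.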
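The thread setup itself, a Runnable with a running flag that is wrapped in a named daemon thread with an uncaught-exception handler, can be sketched with plain JDK classes. This is a minimal illustration of the pattern and not the Sender itself: the class SenderLoopSketch, its methods, and the placeholder loop body are all made up for the example.

```java
import java.util.concurrent.atomic.AtomicInteger;

public class SenderLoopSketch implements Runnable {
    private volatile boolean running = true;            // flipped to false to stop the loop
    private final AtomicInteger iterations = new AtomicInteger();

    @Override
    public void run() {
        while (running) {
            iterations.incrementAndGet();               // placeholder for "send whatever is ready"
            try {
                Thread.sleep(5);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return;
            }
        }
    }

    public int iterations() {
        return iterations.get();
    }

    /** Wrap a Runnable in a named daemon thread that logs uncaught exceptions, then start it. */
    public static Thread startDaemon(String name, Runnable runnable) {
        Thread thread = new Thread(runnable, name);
        thread.setDaemon(true);                         // a daemon thread does not keep the JVM alive
        thread.setUncaughtExceptionHandler(
                (t, e) -> System.err.println("Uncaught exception in " + name + ": " + e));
        thread.start();
        return thread;
    }

    /** Ask the loop to stop and wait up to timeoutMs for the thread to finish. */
    public boolean closeAndJoin(Thread thread, long timeoutMs) {
        running = false;
        try {
            thread.join(timeoutMs);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return !thread.isAlive();
    }

    public static void main(String[] args) {
        SenderLoopSketch sender = new SenderLoopSketch();
        Thread ioThread = startDaemon("sketch-network-thread", sender);
        System.out.println("stopped cleanly: " + sender.closeAndJoin(ioThread, 2000));
    }
}
```

Making the thread a daemon means an application that forgets to close the producer is not kept alive by this background thread alone.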

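Summary

To wrap up, here is what we covered today:

Which components KafkaProducer initializes

Producer core component: a closer look at RecordAccumulator

Producer core component: a closer look at Metadata

Producer core component: a closer look at NetworkClient

Producer core component: a closer look at the Sender thread

We only got a basic acquaintance with what each component is, what it mainly does, and what it mainly contains. I have consolidated everything we covered today into one big diagram:

With this diagram in hand, analyzing the Kafka Producer's core flows in the coming installments will be much easier. For example:

The metadata fetch mechanism with its flexible use of wait + notifyAll, and the routing strategy for sending messages

The network communication mechanism: sending messages on top of native NIO, and the neat solution to the sticky-packet and packet-splitting problem

The Producer's high throughput: the double-ended queues in the memory buffer plus the batched sending mechanism

Stay tuned. That's all for today; see you in the next installment!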
