
[PyTorch] A Complete Installation Guide to the NVIDIA Driver, CUDA Toolkit, and cuDNN


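How the GPU, NVIDIA Graphics Drivers, CUDA, CUDA Toolkit, and cuDNN Relate

- GPU: the physical graphics card.

- NVIDIA Graphics Drivers: the driver for the physical graphics card.

- CUDA: NVIDIA's general-purpose parallel computing platform and programming model, which lets the GPU work on complex computational problems. The driver-side part of CUDA is bundled with the NVIDIA Graphics Drivers, so it needs no separate installation.

- CUDA Toolkit: contains the CUDA runtime API, nvcc (the compiler for CUDA code; CUDA has its own language, and the code must be compiled before it can run), debugging tools, and more. In short, the CUDA Toolkit is the toolbox for developing CUDA programs. You have to download and install it yourself. Its installer can also optionally install the NVIDIA Graphics Drivers along with it, which shortens the overall procedure.

- cuDNN: a GPU-accelerated library, built on top of the CUDA Toolkit, for the basic operations of deep neural networks. You have to download and install it yourself, although "installing" really just means copying a few library files to the right paths.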

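Deciding What You Need

Using PyTorch Only

If you only use torch, you do not need to install the CUDA Toolkit or cuDNN. The graphics driver is enough, because the conda or pip package takes care of everything else.

The installation order is: NVIDIA Graphics Drivers -> PyTorch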
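A quick way to confirm that the driver-plus-package setup works is to ask PyTorch itself. The following is a minimal sketch, assuming PyTorch may or may not be installed in the current environment; the helper name `torch_cuda_report` is made up for illustration.

```python
import importlib.util


def torch_cuda_report():
    """Summarize what the installed PyTorch build knows about CUDA."""
    # Avoid an ImportError when PyTorch is not installed yet.
    if importlib.util.find_spec("torch") is None:
        return {"torch_installed": False}

    import torch

    return {
        "torch_installed": True,
        "torch_version": torch.__version__,
        # CUDA version the package was built against (None for CPU-only builds).
        "built_with_cuda": torch.version.cuda,
        # True only if PyTorch can see a working driver and GPU.
        "cuda_available": torch.cuda.is_available(),
    }


if __name__ == "__main__":
    for key, value in torch_cuda_report().items():
        print(f"{key}: {value}")
```

If `cuda_available` is False although a GPU is present, the driver is the first thing to check.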

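Using Third-Party Extensions Built on torch

The CUDA Toolkit is required.

When you install third-party extensions built on torch, such as tiny-cuda-nn, nvdiffrast, or simple-knn, torch/utils/cpp_extension.py raises the following error if the CUDA Toolkit is not installed: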

File "....../torch/utils/cpp_extension.py", line 1076, in CUDAExtension

library_dirs += library_paths(cuda=True)

File "....../torch/utils/cpp_extension.py", line 1203, in library_paths

if (not os.path.exists(_join_cuda_home(lib_dir)) and

File "....../torch/utils/cpp_extension.py", line 2416, in _join_cuda_home

raise OSError('CUDA_HOME environment variable is not set. '

OSError: CUDA_HOME environment variable is not set. Please set it to your CUDA install root.

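The error means that the build could not find the CUDA install root through the CUDA_HOME environment variable. A valid value for it only exists once the CUDA Toolkit is installed.

The missing variable does not matter when you only use PyTorch, because the PyTorch package ships with everything it needs and does not rely on CUDA environment variables. Installing other extensions is a different story, and the problem has to be solved.

Deep learning researchers run into such extensions all the time, so I recommend installing the CUDA Toolkit right away and letting its installer install the graphics driver as well.

The installation order is therefore: NVIDIA Graphics Drivers (optional, since the CUDA Toolkit installer can bundle it) -> CUDA Toolkit -> PyTorch -> cuDNN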
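Before building an extension, it can help to see which CUDA root the environment is likely to expose. The sketch below is a simplified approximation written for this article, not PyTorch's actual lookup code: the function name `guess_cuda_home`, the search order, and the `/usr/local/cuda` fallback are assumptions.

```python
import os
import shutil


def guess_cuda_home(env=None, default="/usr/local/cuda"):
    """Return a plausible CUDA install root, or None if nothing is found."""
    env = os.environ if env is None else env

    # 1. Explicit environment variables win.
    for var in ("CUDA_HOME", "CUDA_PATH"):
        value = env.get(var)
        if value:
            return value

    # 2. Otherwise derive the root from the nvcc found on PATH
    #    (<root>/bin/nvcc -> <root>). A missing PATH key falls back
    #    to the PATH of the current process.
    nvcc = shutil.which("nvcc", path=env.get("PATH"))
    if nvcc:
        return os.path.dirname(os.path.dirname(os.path.realpath(nvcc)))

    # 3. Finally try the conventional Linux location.
    return default if os.path.isdir(default) else None


if __name__ == "__main__":
    print(guess_cuda_home() or "No CUDA Toolkit found")
```

If this prints no path, installing the CUDA Toolkit (and exporting CUDA_HOME if needed) is the fix for the error above.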

Installing the NVIDIA Graphics Drivers (Optional)

Preface

The CUDA Toolkit installer can install the NVIDIA Graphics Drivers for you, so this step can be skipped entirely. I describe it anyway for completeness.

Linux

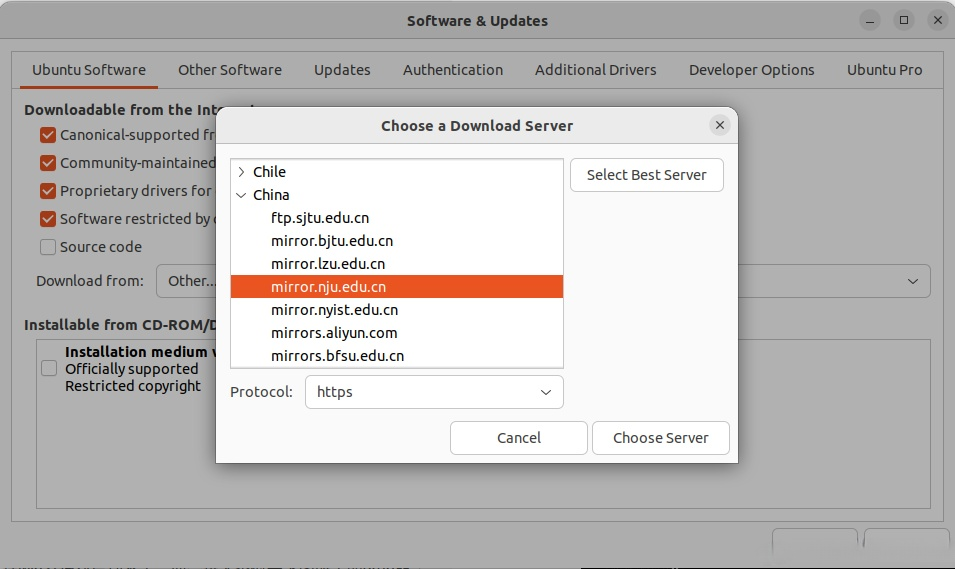

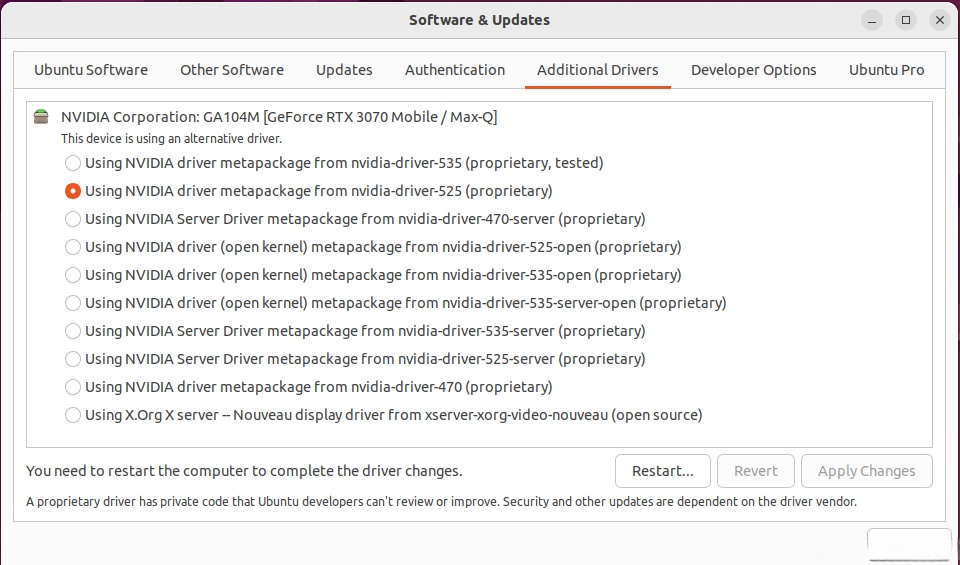

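Method 1: Graphical installation (recommended)

After switching to a suitable package mirror, update and upgrade. The upgrade is mandatory. In my experience, the NVIDIA driver otherwise does not take effect, and the graphical interface does not even come up the next time you boot into Linux!

sudo apt update

sudo apt upgrade

Install the required dependencies: gcc, g++, and cmake. Skipping them has the same consequence: the driver does not take effect, and the graphical interface fails to start after the next reboot.

sudo apt install gcc cmake

sudo apt install g++

Then download and install the driver directly from the graphical interface.

Method 2: Manual download and command-line installation (not recommended)

I have not used this method and do not recommend it. When the graphical interface is available, there is no reason to take the long way round.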

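Windows

Method 1: Automatic installation with GeForce Experience

Download GeForce Experience from the NVIDIA website. Once it is installed, you can download the latest driver directly inside the application.

Method 2: Manual installation

On the same page, search manually for the driver matching your graphics card model, then download and install it.

Verifying the Installation

nvidia-smi

If the output resembles the following, the NVIDIA Graphics Drivers are installed: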

Sat Jan 27 14:35:37 2024

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 525.147.05 Driver Version: 525.147.05 CUDA Version: 12.0 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 NVIDIA GeForce ... Off | 00000000:01:00.0 On | N/A |

| N/A 35C P5 23W / 115W | 1320MiB / 8192MiB | 13% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

| 0 N/A N/A 3719 G /usr/lib/xorg/Xorg 489MiB |

| 0 N/A N/A 3889 G /usr/bin/gnome-shell 53MiB |

| 0 N/A N/A 4218 C+G fantascene-dynamic-wallpaper 406MiB |

| 0 N/A N/A 8052 G gnome-control-center 2MiB |

| 0 N/A N/A 8397 G ...--variations-seed-version 282MiB |

| 0 N/A N/A 13242 G ...RendererForSitePerProcess 59MiB |

| 0 N/A N/A 47273 G ...--variations-seed-version 18MiB |

+-----------------------------------------------------------------------------+
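The two values that matter later in this guide, the driver version and the CUDA version the driver supports, sit in the header line of that output. Here is a small sketch for extracting them; the function names and the regular expression are my own, and the header layout is assumed to match the sample above.

```python
import re
import shutil
import subprocess

# Matches e.g. "Driver Version: 525.147.05 CUDA Version: 12.0".
HEADER_RE = re.compile(r"Driver Version:\s*([\d.]+)\s+CUDA Version:\s*([\d.]+)")


def parse_smi_header(text):
    """Return (driver_version, max_cuda_version) or None if not found."""
    match = HEADER_RE.search(text)
    if match is None:
        return None
    return match.group(1), match.group(2)


def query_driver():
    """Run nvidia-smi if it exists and parse its header."""
    if shutil.which("nvidia-smi") is None:
        return None  # driver tools are not installed or not on PATH
    result = subprocess.run(
        ["nvidia-smi"], capture_output=True, text=True, check=False
    )
    return parse_smi_header(result.stdout)


if __name__ == "__main__":
    print(query_driver())
```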

Installing the CUDA Toolkit

Checking the Graphics Driver Version

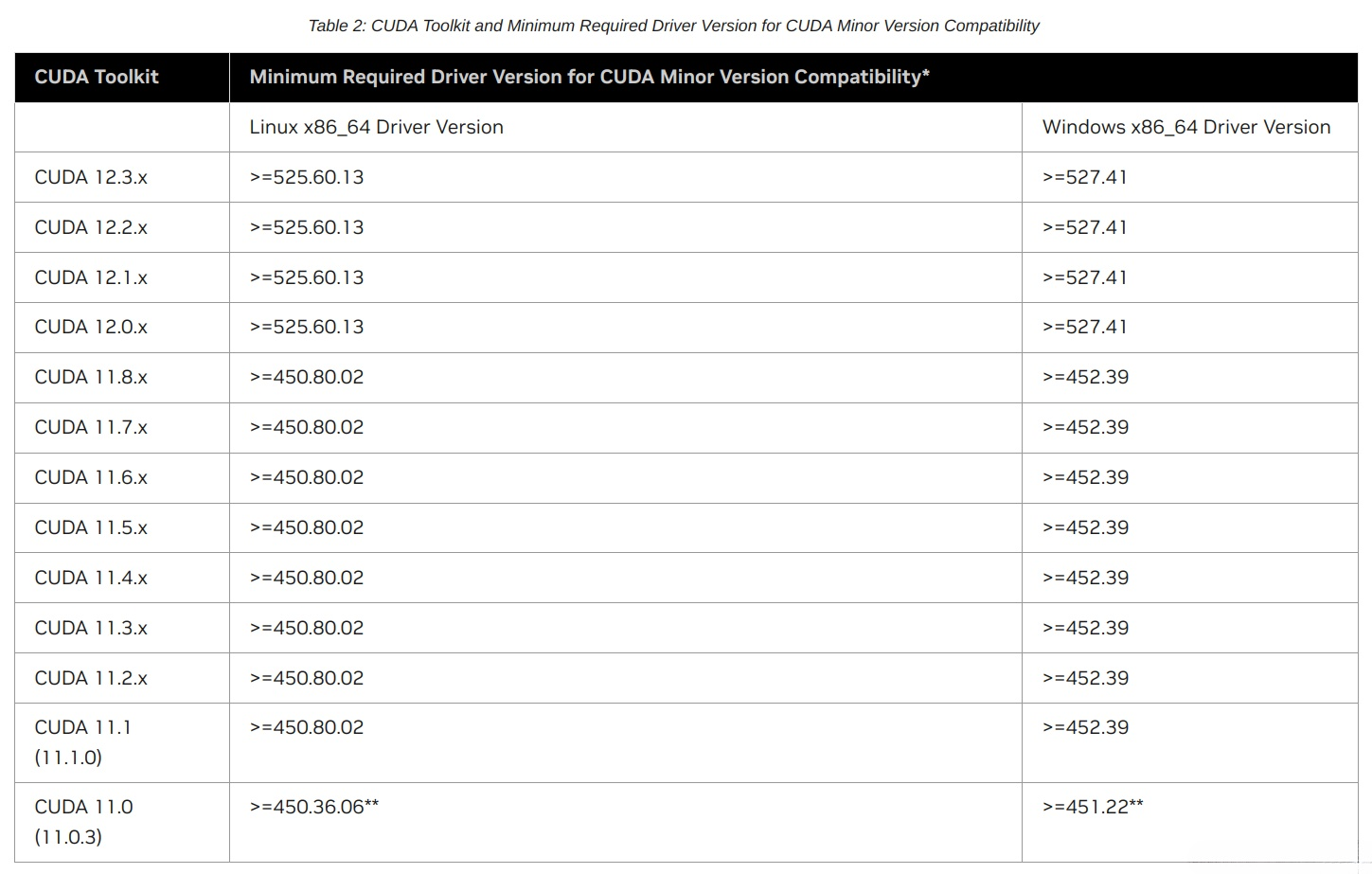

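Each CUDA Toolkit release requires a minimum version of the graphics driver you just installed; NVIDIA's documentation lists the details. The screenshot shows the relationship as of January 2024.

If you skipped the driver installation, simply download the latest CUDA Toolkit. Its installer bundles a matching graphics driver.

If the driver is already installed, query its version with: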

nvidia-smi

The header line of the output shows that my driver version is 525.147.05:

| NVIDIA-SMI 525.147.05 Driver Version: 525.147.05 CUDA Version: 12.0 |
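Checking a driver against a toolkit's minimum requirement is a component-wise version comparison. The sketch below illustrates it with the figure quoted in the CUDA 11.8 installer summary later in this article ("at least 520.00"); the function names are hypothetical.

```python
from itertools import zip_longest


def version_tuple(version):
    """'525.147.05' -> (525, 147, 5)"""
    return tuple(int(part) for part in version.split("."))


def driver_satisfies(installed, minimum):
    """True if the installed driver version is at least the minimum."""
    # Pad the shorter version with zeros so that '520' equals '520.00'.
    pairs = zip_longest(version_tuple(installed), version_tuple(minimum), fillvalue=0)
    have, need = zip(*pairs)
    return have >= need


if __name__ == "__main__":
    # Driver from this article versus the CUDA 11.8 requirement.
    print(driver_satisfies("525.147.05", "520.00"))
```

Comparing the versions as plain strings would be unreliable, which is why each component is converted to an integer first.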

Linux

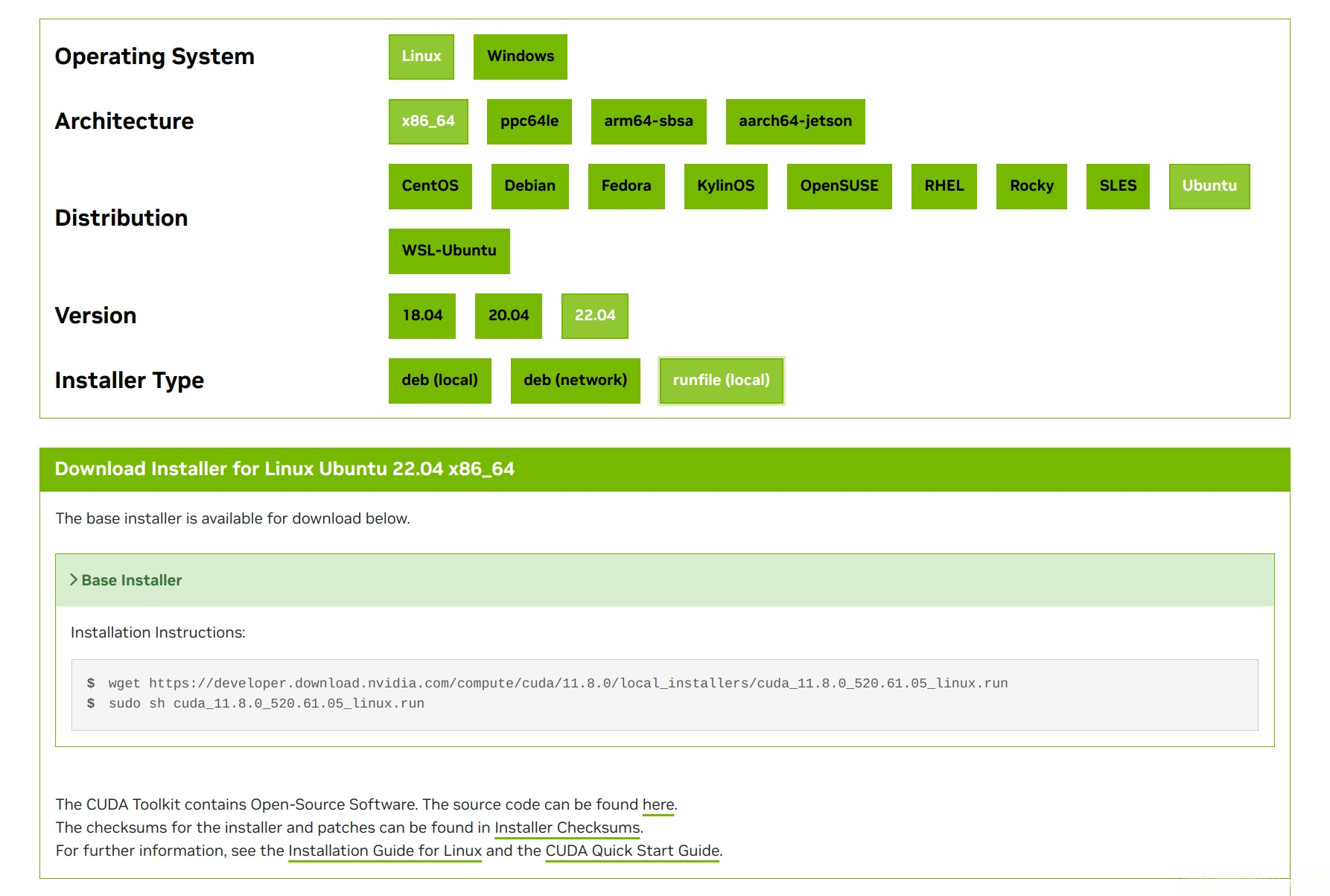

Go to the official website and pick a suitable version, then select the options matching your machine step by step.

Once the selection is complete, a page similar to the screenshot appears. Follow the official steps one at a time.

The official page offers three installation methods. I tried the second one first. The official commands are:

wget https://developer.download.nvidia.com/compute/cuda/repos/ubuntu2204/x86_64/cuda-keyring_1.0-1_all.deb

sudo dpkg -i cuda-keyring_1.0-1_all.deb

sudo apt-get update

sudo apt-get -y install cuda

The last command, sudo apt-get -y install cuda, then failed as follows:

han@ASUS-TUF-Gaming-F15-FX507ZR:~$ sudo apt-get -y install cuda

Reading package lists... Done

Building dependency tree... Done

Reading state information... Done

Some packages could not be installed. This may mean that you have requested an impossible situation or if you are using the unstable distribution that some required packages have not yet been created or been moved out of Incoming.

The following information may help to resolve the situation:

The following packages have unmet dependencies:

libnvidia-common-525 : Conflicts: libnvidia-common

libnvidia-common-545 : Conflicts: libnvidia-common

nvidia-kernel-common-525 : Conflicts: nvidia-kernel-common

nvidia-kernel-common-545 : Conflicts: nvidia-kernel-common

E: Error, pkgProblemResolver::Resolve generated breaks, this may be caused by held packages.

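According to the message, libnvidia-common-525 and nvidia-kernel-common-525 are already installed, so libnvidia-common and nvidia-kernel-common cannot be installed alongside them, and the existing packages would have to be replaced. In theory there are two ways to resolve this:

- Replace the packages:

sudo apt-get remove libnvidia-common-525 nvidia-kernel-common-525

sudo apt-get install libnvidia-common nvidia-kernel-common

- Abandon apt and install with aptitude:

sudo aptitude install cuda

I tried neither of them and switched to another installation method from the official page instead:

wget https://developer.download.nvidia.com/compute/cuda/11.8.0/local_installers/cuda_11.8.0_520.61.05_linux.run

sudo sh cuda_11.8.0_520.61.05_linux.run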

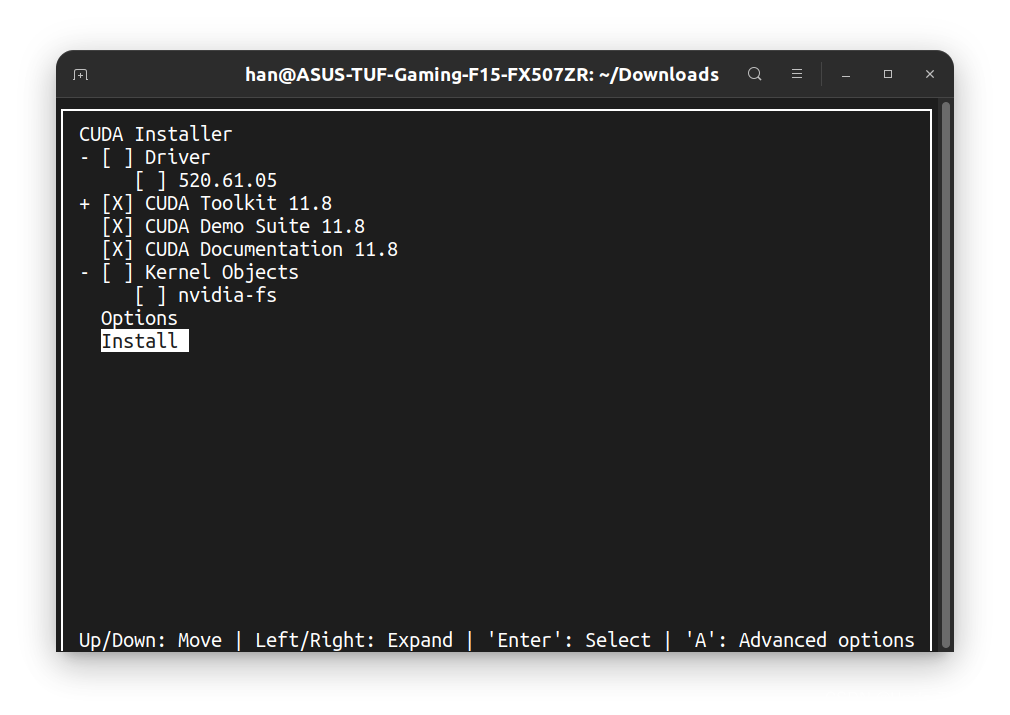

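The .run file takes a while to unpack after you start it, so be patient. An installation wizard then appears. Accept the prompts until you reach this screen:

- Note 1: if you skipped the graphics driver installation, tick the first item, Driver. I had already installed the driver, so I left Driver unticked and went ahead with the installation.

- Note 2: ticking Kernel Objects made the installation fail. I am not sure why; it may be because the graphics driver was already installed. In any case, leave Kernel Objects unticked.

After choosing Install, I also hit an error saying that dkms was not installed. I ran sudo apt install dkms and tried again, and the installation succeeded with this summary: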

===========

= Summary =

===========

Driver: Not Selected

Toolkit: Installed in /usr/local/cuda-11.8/

Please make sure that

- PATH includes /usr/local/cuda-11.8/bin

- LD_LIBRARY_PATH includes /usr/local/cuda-11.8/lib64, or, add /usr/local/cuda-11.8/lib64 to /etc/ld.so.conf and run ldconfig as root

To uninstall the CUDA Toolkit, run cuda-uninstaller in /usr/local/cuda-11.8/bin

***WARNING: Incomplete installation! This installation did not install the CUDA Driver. A driver of version at least 520.00 is required for CUDA 11.8 functionality to work.

To install the driver using this installer, run the following command, replacing <CudaInstaller> with the name of this run file:

sudo <CudaInstaller>.run --silent --driver

Logfile is /var/log/cuda-installer.log

Following the hint, add the environment variables:

echo 'export LD_LIBRARY_PATH="/usr/local/cuda/lib64:$LD_LIBRARY_PATH"' >> ~/.bashrc

echo 'export PATH="/usr/local/cuda/bin:$PATH"' >> ~/.bashrc

The installation is then complete.
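To double-check that a shell really picked up the two entries the installer summary asks for, the variables can be inspected programmatically. This is a minimal sketch for Linux (colon-separated lists); the helper name `missing_cuda_entries` and the default root `/usr/local/cuda` are assumptions matching the commands above.

```python
import os


def missing_cuda_entries(env, cuda_root="/usr/local/cuda"):
    """Return the (variable, path) pairs that are still missing."""
    wanted = {
        "PATH": f"{cuda_root}/bin",
        "LD_LIBRARY_PATH": f"{cuda_root}/lib64",
    }
    missing = []
    for var, needed in wanted.items():
        # Split the colon-separated list and ignore trailing slashes.
        entries = [e.rstrip("/") for e in env.get(var, "").split(":") if e]
        if needed not in entries:
            missing.append((var, needed))
    return missing


if __name__ == "__main__":
    problems = missing_cuda_entries(os.environ)
    print("OK" if not problems else f"Missing: {problems}")
```

Run it in a freshly opened terminal, since changes to ~/.bashrc do not affect shells that are already running.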

Windows

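Windows is simpler: go to the official website, pick a suitable version, download the exe installer, and follow the wizard.

- Note: as on Linux, tick or untick the graphics driver depending on whether you have already installed it.

The environment variables are set automatically, so no manual setup is needed. The installer adds the two variables CUDA_PATH_V11_8 and CUDA_PATH:

CUDA_PATH_V11_8=C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.8

CUDA_PATH=C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.8

The installer also adds these two entries to the Path environment variable:

C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.8\bin

C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.8\libnvvp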

Verifying the Installation

Open a new terminal and run:

nvcc -V

The output should resemble:

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2022 NVIDIA Corporation

Built on Wed_Sep_21_10:33:58_PDT_2022

Cuda compilation tools, release 11.8, V11.8.89

Build cuda_11.8.r11.8/compiler.31833905_0
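When this check has to be scripted, the release number can be pulled out of the nvcc banner. The sketch below assumes the banner keeps the "release X.Y, VX.Y.Z" wording shown above; the function names are made up for illustration.

```python
import re
import shutil
import subprocess

# Matches e.g. "release 11.8, V11.8.89".
NVCC_RE = re.compile(r"release (\d+\.\d+), V(\d+\.\d+\.\d+)")


def parse_nvcc_version(text):
    """Return (release, full_version) or None if the banner is not recognized."""
    match = NVCC_RE.search(text)
    if match is None:
        return None
    return match.group(1), match.group(2)


def installed_toolkit_version():
    """Run `nvcc -V` if nvcc is on PATH and parse its output."""
    if shutil.which("nvcc") is None:
        return None
    result = subprocess.run(["nvcc", "-V"], capture_output=True, text=True, check=False)
    return parse_nvcc_version(result.stdout)


if __name__ == "__main__":
    print(installed_toolkit_version())
```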

Switching Versions

Linux

The cuda symbolic link lives in /usr/local/. List the directory to see it:

ls -l /usr/local/

The output should resemble:

han@ASUS-TUF-Gaming-F15-FX507ZR:~$ ls -l /usr/local/

total 40

lrwxrwxrwx 1 root root 21 1月 27 16:43 cuda -> /usr/local/cuda-11.8/

drwxr-xr-x 17 root root 4096 1月 27 16:44 cuda-11.8

drwxr-xr-x 2 root root 4096 8月 9 2022 etc

drwxr-xr-x 2 root root 4096 8月 9 2022 games

drwxr-xr-x 2 root root 4096 8月 9 2022 include

drwxr-xr-x 2 root root 4096 1月 27 16:38 kernelobjects

drwxr-xr-x 3 root root 4096 1月 22 15:26 lib

lrwxrwxrwx 1 root root 9 1月 22 14:10 man -> share/man

drwxr-xr-x 3 root root 4096 1月 23 21:52 Qt-5.6.3

drwxr-xr-x 2 root root 4096 8月 9 2022 sbin

drwxr-xr-x 8 root root 4096 1月 23 22:09 share

drwxr-xr-x 2 root root 4096 8月 9 2022 src

The cuda link currently points to the cuda-11.8 I just installed. One machine can hold several CUDA Toolkit versions, and switching between them only requires repointing this symbolic link.

Suppose CUDA Toolkit 12.0 is also installed. The following command switches to it (note that the command is ln, not ls, and that it needs root privileges because /usr/local is owned by root):

sudo ln -snf /usr/local/cuda-12.0/ /usr/local/cuda
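The mechanics of repointing a symbolic link can be tried safely outside /usr/local. The following sketch recreates the layout in a temporary directory and switches the link by creating a new one and renaming it over the old one; the helper name `point_link_at` and the directory names are illustrative only.

```python
import os
import tempfile


def point_link_at(link_path, target_dir):
    """Make link_path a symlink to target_dir, replacing any existing link."""
    tmp = link_path + ".tmp"
    if os.path.lexists(tmp):
        os.remove(tmp)
    os.symlink(target_dir, tmp)
    # Renaming over the old link swaps it in one step on POSIX systems.
    os.replace(tmp, link_path)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        old = os.path.join(root, "cuda-11.8")
        new = os.path.join(root, "cuda-12.0")
        os.mkdir(old)
        os.mkdir(new)
        link = os.path.join(root, "cuda")

        point_link_at(link, old)
        print("cuda ->", os.readlink(link))
        point_link_at(link, new)
        print("cuda ->", os.readlink(link))
```

Because PATH and LD_LIBRARY_PATH refer to /usr/local/cuda rather than a versioned directory, they keep working after the link changes.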

Windows

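Change the automatically added CUDA_PATH environment variable to the directory of the desired version:

CUDA_PATH=C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v12.0

Also update the two entries that were added to the Path environment variable:

C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v12.0\bin

C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v12.0\libnvvp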

Uninstalling the CUDA Toolkit on Linux

Recall this line from the summary printed at the end of the installation:

To uninstall the CUDA Toolkit, run cuda-uninstaller in /usr/local/cuda-11.8/bin

So a command like the following is all that is needed (adjust the version number to yours):

sudo /usr/local/cuda-11.8/bin/cuda-uninstaller

Installing PyTorch

Checking the CUDA Version Supported by the Driver

The same command again:

nvidia-smi

The header line of the output shows that the highest CUDA version my driver supports is 12.0:

| NVIDIA-SMI 525.147.05 Driver Version: 525.147.05 CUDA Version: 12.0 |

Downloading PyTorch

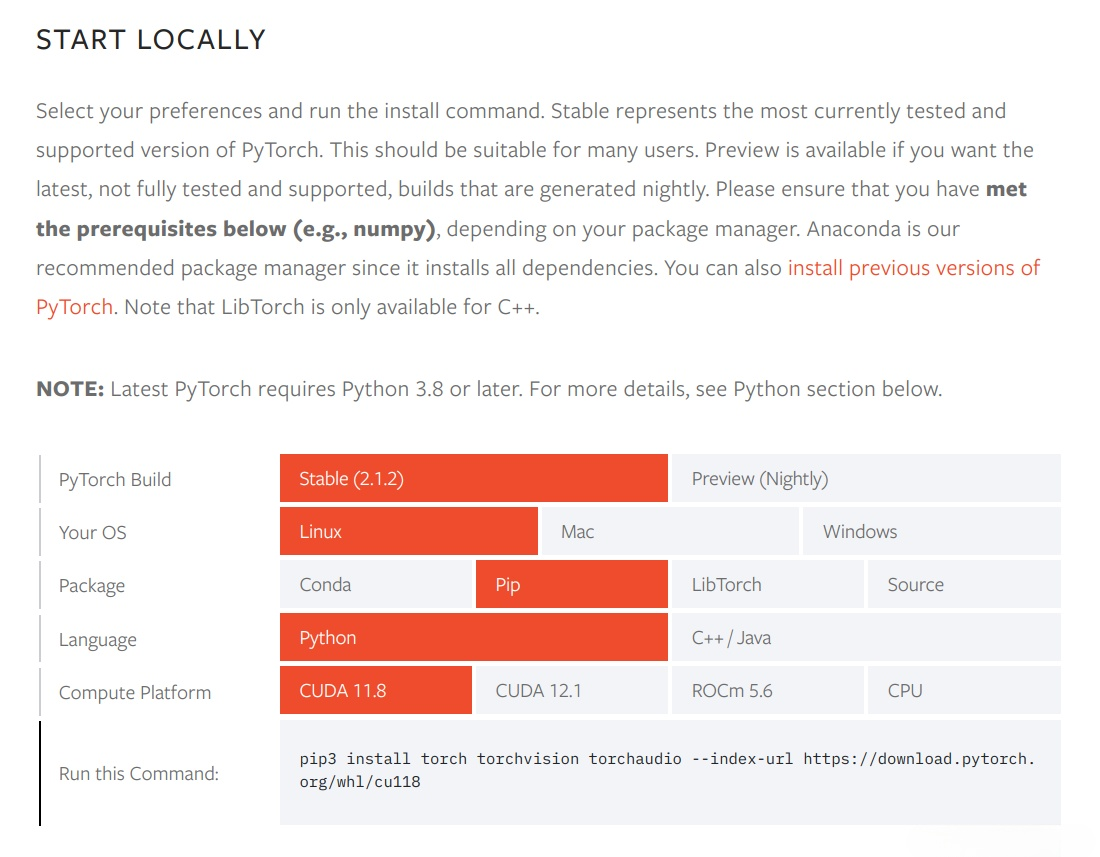

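Open the PyTorch website and run the installation command that matches your environment. Pay particular attention to the highest CUDA version your graphics driver supports: the CUDA version of the PyTorch build must not exceed it. For example, my driver supports CUDA up to 12.0, so I chose 11.8, as in the screenshot.

Installing with conda is of course also an option.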
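The selection rule amounts to "take the newest build whose CUDA version does not exceed the driver's maximum". A small sketch of that rule follows; the list of available builds is a made-up example, so check the PyTorch website for the builds that are actually offered.

```python
def parse(version):
    """'11.8' -> (11, 8)"""
    return tuple(int(part) for part in version.split("."))


def pick_build(driver_max_cuda, available_builds):
    """Return the newest build not exceeding the driver's CUDA version, or None."""
    limit = parse(driver_max_cuda)
    usable = [v for v in available_builds if parse(v) <= limit]
    return max(usable, key=parse) if usable else None


if __name__ == "__main__":
    # Hypothetical list of CUDA builds offered on the download page.
    builds = ["11.8", "12.1"]
    print(pick_build("12.0", builds))
```

With a driver that supports up to CUDA 12.0 and the example list above, the rule selects 11.8, which matches the choice made in this article.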

Installing cuDNN

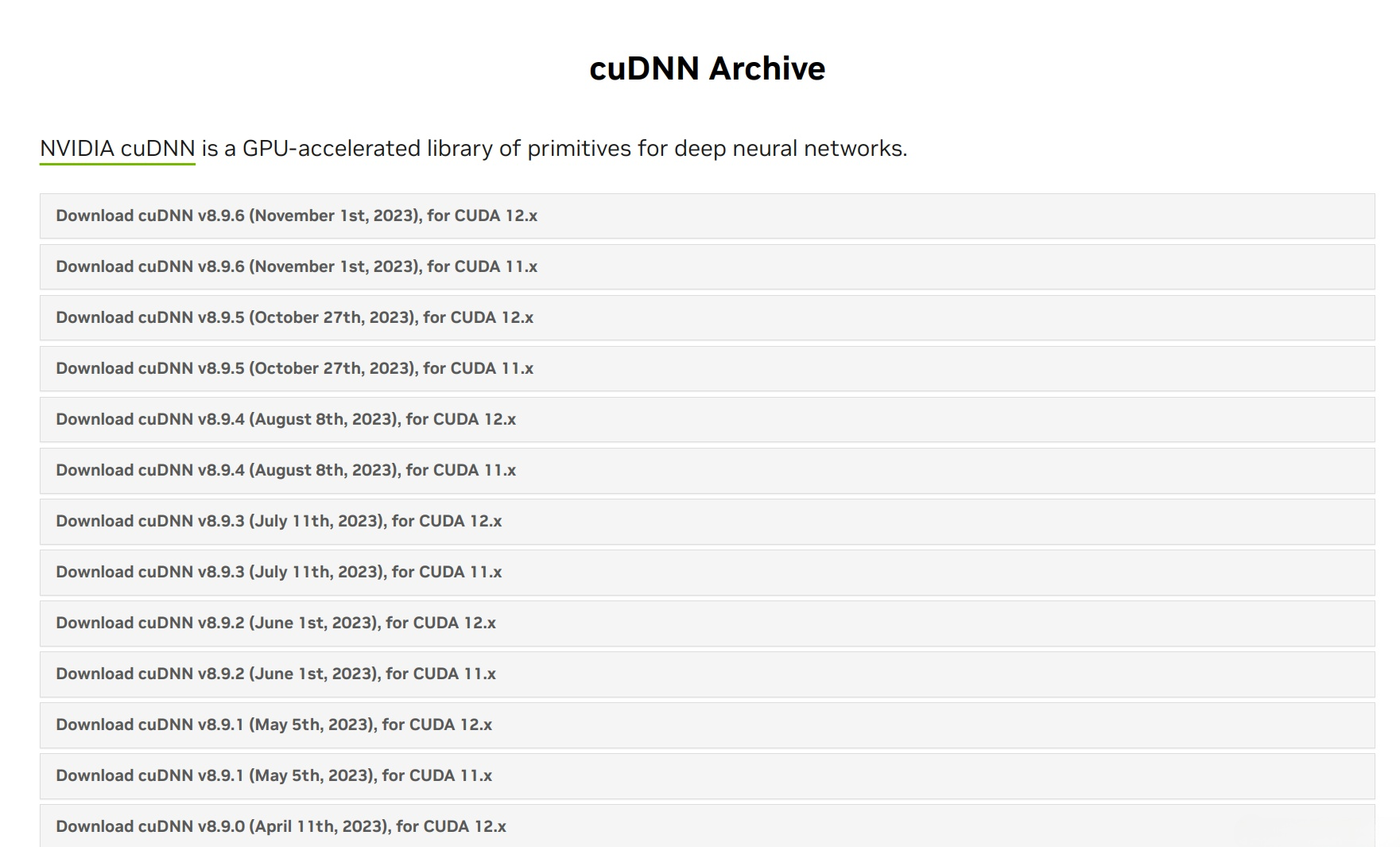

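cuDNN has requirements on the installed CUDA version. Go to the official website and pick a suitable version; the page looks like the screenshot.

Download it. The installation steps are described in the official cuDNN documentation.

Linux

Following the official documentation, install the dependency first: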

sudo apt-get install zlib1g

Method 1: Download and extract the tar archive (recommended)

Extract the archive after downloading:

tar -xvf cudnn-linux-*-archive.tar.xz

Then copy the files and set their permissions, and you are done:

sudo cp cudnn-*-archive/include/cudnn*.h /usr/local/cuda/include

sudo cp -P cudnn-*-archive/lib/libcudnn* /usr/local/cuda/lib64

sudo chmod a+r /usr/local/cuda/include/cudnn*.h /usr/local/cuda/lib64/libcudnn*
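One way to confirm that the headers landed in the right place is to read the version macros from the copied files. The sketch below assumes the cuDNN 8 layout, in which CUDNN_MAJOR, CUDNN_MINOR, and CUDNN_PATCHLEVEL are defined in cudnn_version.h; the function names are hypothetical.

```python
import os
import re


def parse_cudnn_version(header_text):
    """Return (major, minor, patch) from cudnn_version.h contents, or None."""
    parts = []
    for name in ("CUDNN_MAJOR", "CUDNN_MINOR", "CUDNN_PATCHLEVEL"):
        match = re.search(rf"^\s*#define\s+{name}\s+(\d+)", header_text, re.MULTILINE)
        if match is None:
            return None
        parts.append(int(match.group(1)))
    return tuple(parts)


def installed_cudnn_version(include_dir="/usr/local/cuda/include"):
    """Read the version from the header copied during installation."""
    path = os.path.join(include_dir, "cudnn_version.h")
    if not os.path.isfile(path):
        return None
    with open(path, errors="replace") as handle:
        return parse_cudnn_version(handle.read())


if __name__ == "__main__":
    print(installed_cudnn_version())
```

A result of None means the header was not found in that directory, which usually points to a wrong copy destination.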

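Method 2: Install from the deb package (not recommended)

Installing from the deb package is actually more involved.

- After downloading, install the local repository package with dpkg:

sudo dpkg -i cudnn-local-repo-*.deb

- Import the GPG key and refresh the package index, as described in the official documentation:

sudo cp /var/cudnn-local-repo-*/cudnn-local-*-keyring.gpg /usr/share/keyrings/

sudo apt-get update

- Then install the runtime library, the developer library, and the samples:

sudo apt-get install libcudnn8=x.x.x.x-1+cudaX.Y

sudo apt-get install libcudnn8-dev=x.x.x.x-1+cudaX.Y

sudo apt-get install libcudnn8-samples=x.x.x.x-1+cudaX.Y

Replace x.x.x.x with your cuDNN version and X.Y with your CUDA version.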

Windows

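Windows only supports installation by extracting an archive. Download and extract the zip file, then copy the library files as follows:

- Copy bin\cudnn*.dll to C:\Program Files\NVIDIA\CUDNN\v8.x\bin.

- Copy include\cudnn*.h to C:\Program Files\NVIDIA\CUDNN\v8.x\include.

- Copy lib\cudnn*.lib to C:\Program Files\NVIDIA\CUDNN\v8.x\lib.

Then edit the PATH environment variable and add this entry:

C:\Program Files\NVIDIA\CUDNN\v8.x\bin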

Verifying the Installation

Run /usr/local/cuda/extras/demo_suite/deviceQuery. The output should resemble:

./deviceQuery Starting...

CUDA Device Query (Runtime API) version (CUDART static linking)

Detected 1 CUDA Capable device(s)

Device 0: "NVIDIA GeForce RTX 3070 Laptop GPU"

CUDA Driver Version / Runtime Version 12.0 / 11.8

CUDA Capability Major/Minor version number: 8.6

Total amount of global memory: 7952 MBytes (8337752064 bytes)

(40) Multiprocessors, (128) CUDA Cores/MP: 5120 CUDA Cores

GPU Max Clock rate: 1560 MHz (1.56 GHz)

Memory Clock rate: 7001 Mhz

Memory Bus Width: 256-bit

L2 Cache Size: 4194304 bytes

Maximum Texture Dimension Size (x,y,z) 1D=(131072), 2D=(131072, 65536), 3D=(16384, 16384, 16384)

Maximum Layered 1D Texture Size, (num) layers 1D=(32768), 2048 layers

Maximum Layered 2D Texture Size, (num) layers 2D=(32768, 32768), 2048 layers

Total amount of constant memory: 65536 bytes

Total amount of shared memory per block: 49152 bytes

Total number of registers available per block: 65536

Warp size: 32

Maximum number of threads per multiprocessor: 1536

Maximum number of threads per block: 1024

Max dimension size of a thread block (x,y,z): (1024, 1024, 64)

Max dimension size of a grid size (x,y,z): (2147483647, 65535, 65535)

Maximum memory pitch: 2147483647 bytes

Texture alignment: 512 bytes

Concurrent copy and kernel execution: Yes with 2 copy engine(s)

Run time limit on kernels: Yes

Integrated GPU sharing Host Memory: No

Support host page-locked memory mapping: Yes

Alignment requirement for Surfaces: Yes

Device has ECC support: Disabled

Device supports Unified Addressing (UVA): Yes

Device supports Compute Preemption: Yes

Supports Cooperative Kernel Launch: Yes

Supports MultiDevice Co-op Kernel Launch: Yes

Device PCI Domain ID / Bus ID / location ID: 0 / 1 / 0

Compute Mode:

< Default (multiple host threads can use ::cudaSetDevice() with device simultaneously) >

deviceQuery, CUDA Driver = CUDART, CUDA Driver Version = 12.0, CUDA Runtime Version = 11.8, NumDevs = 1, Device0 = NVIDIA GeForce RTX 3070 Laptop GPU

Result = PASS

Run /usr/local/cuda/extras/demo_suite/bandwidthTest. The output should resemble:

[CUDA Bandwidth Test] - Starting...

Running on...

Device 0: NVIDIA GeForce RTX 3070 Laptop GPU

Quick Mode

Host to Device Bandwidth, 1 Device(s)

PINNED Memory Transfers

Transfer Size (Bytes) Bandwidth(MB/s)

33554432 12499.4

Device to Host Bandwidth, 1 Device(s)

PINNED Memory Transfers

Transfer Size (Bytes) Bandwidth(MB/s)

33554432 12843.0

Device to Device Bandwidth, 1 Device(s)

PINNED Memory Transfers

Transfer Size (Bytes) Bandwidth(MB/s)

33554432 384586.5

Result = PASS

NOTE: The CUDA Samples are not meant for performance measurements. Results may vary when GPU Boost is enabled.
