The etcd Story Behind Creating a Pod

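In the Kubernetes architecture, etcd serves as the metadata store. What does etcd do behind the scenes when a Pod is created? This article walks through a concrete example, step by step.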

etcd Storage Concepts

Kubernetes Resource Storage Format

    /registry/deployments/default/nginx-deployment
    /registry/events/default/nginx-deployment-66b6c48dd5-gl7zl.16c35febf6e3c38c
    /registry/events/default/nginx-deployment-66b6c48dd5-gl7zl.16c35fec1db96fed
    /registry/events/default/nginx-deployment-66b6c48dd5-gl7zl.16c35fec1fdba240
    /registry/events/default/nginx-deployment-66b6c48dd5-gl7zl.16c35fec249adf98
    /registry/events/default/nginx-deployment-66b6c48dd5-k55k7.16c35edbfa8784f8
    /registry/events/default/nginx-deployment-66b6c48dd5-w9br6.16c35eeb78bfa621
    /registry/events/default/nginx-deployment-66b6c48dd5-w9br6.16c35eeba0a4694f
    /registry/events/default/nginx-deployment-66b6c48dd5-w9br6.16c35eeba259230a
    /registry/events/default/nginx-deployment-66b6c48dd5-w9br6.16c35eeba7251388
    /registry/events/default/nginx-deployment-66b6c48dd5-w9br6.16c35fda25f0af06
    /registry/events/default/nginx-deployment-66b6c48dd5.16c35eeb788d245f
    /registry/events/default/nginx-deployment-66b6c48dd5.16c35febf6bb59a0
    /registry/events/default/nginx-deployment.16c35eeb77fc2ef0
    /registry/events/default/nginx-deployment.16c35febf56d6b96
    /registry/pods/default/nginx-deployment-66b6c48dd5-gl7zl
    /registry/replicasets/default/nginx-deployment-66b6c48dd5

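The key under which a Kubernetes resource is stored in etcd has the form prefix + "/" + resource type + "/" + namespace + "/" + resource name. In the listing above, the prefix is /registry.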
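The key format can be illustrated with a few lines of Go. This is only a sketch: the helper name resourceKey and its signature are made up for this example and are not part of Kubernetes.

```go
package main

import (
	"fmt"
	"path"
)

// resourceKey is a hypothetical helper that joins the four parts of a
// storage key: prefix, resource type, namespace, and resource name.
func resourceKey(prefix, resourceType, namespace, name string) string {
	return path.Join(prefix, resourceType, namespace, name)
}

func main() {
	// Rebuild two of the keys from the listing above.
	fmt.Println(resourceKey("/registry", "deployments", "default", "nginx-deployment"))
	fmt.Println(resourceKey("/registry", "pods", "default", "nginx-deployment-66b6c48dd5-gl7zl"))
}
```

Because every key of one resource type in one namespace shares a common prefix, such resources can be listed with a single prefix range query.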

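Storage Module

When kube-apiserver starts, it registers the APIGroup, Version, and Resource handler of every resource with its router. After a request passes the authentication, rate-limiting, authorization, and admission-control checks, it is forwarded to the handler logic of the corresponding resource.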

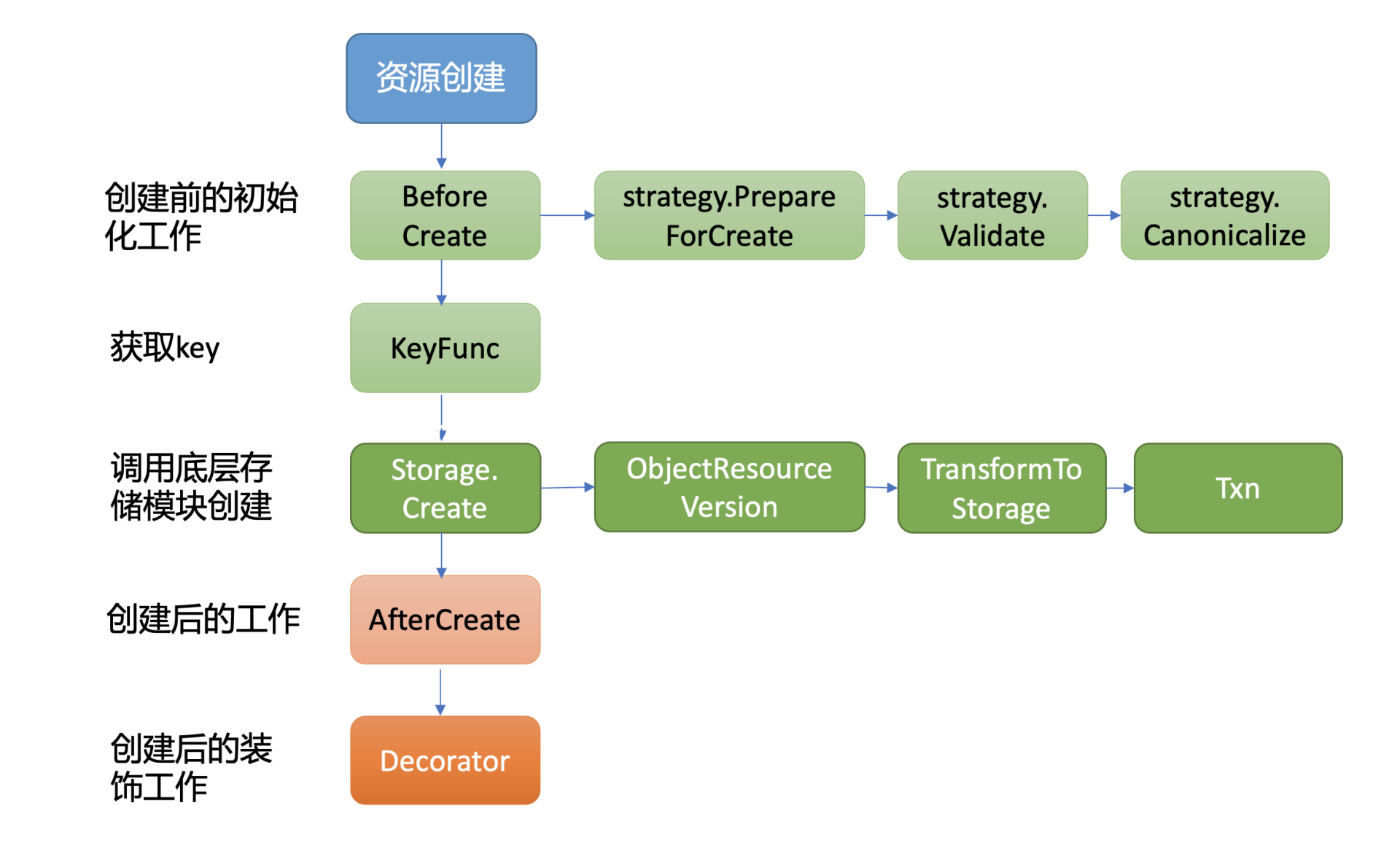

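kube-apiserver also implements a generic resource storage mechanism, similar to a database ORM. It provides hooks that run before and after a resource is created, updated, or deleted, and wraps them in strategy interfaces. To add a new resource, you only need to write the corresponding create, update, and delete strategies; you do not have to call any etcd API yourself.

As the figure shows, creating a resource consists of three main steps: BeforeCreate, Storage.Create, and AfterCreate.

When a request to create the nginx Deployment arrives, the generic storage module first calls back the BeforeCreate strategy implemented by the resource, which performs some initialization before the resource is written to etcd. Below is the create strategy of the Deployment resource (BeforeCreate invokes the strategy's PrepareForCreate method); among other things, it sets deployment.Generation to 1.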

    // PrepareForCreate clears fields that are not allowed to be set by end users on creation.
    func (deploymentStrategy) PrepareForCreate(ctx context.Context, obj runtime.Object) {
        deployment := obj.(*apps.Deployment)
        deployment.Status = apps.DeploymentStatus{}
        deployment.Generation = 1

        pod.DropDisabledTemplateFields(&deployment.Spec.Template, nil)
    }

After the BeforeCreate strategy has run, the Storage.Create interface is invoked. This is where the underlying etcd3 storage module is actually called to write the nginx Deployment object to etcd.
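The three-step flow can be sketched with a toy in-memory store. Everything here is an assumption made for illustration: the Deployment struct, the CreateStrategy interface, and the Store type are heavily simplified stand-ins, not the real kube-apiserver types, and a map plays the role of etcd.

```go
package main

import (
	"errors"
	"fmt"
)

// Deployment is a trimmed-down stand-in for the real API object.
type Deployment struct {
	Name       string
	Generation int64
	Status     string
}

// CreateStrategy is a hypothetical, simplified per-resource strategy interface.
type CreateStrategy interface {
	PrepareForCreate(d *Deployment)
}

type deploymentStrategy struct{}

// PrepareForCreate clears the status and sets the generation to 1,
// mirroring the two field assignments in the excerpt above.
func (deploymentStrategy) PrepareForCreate(d *Deployment) {
	d.Status = ""
	d.Generation = 1
}

// ErrExists is returned when the key is already present.
var ErrExists = errors.New("key already exists")

// Store is a toy generic store; the map stands in for etcd.
type Store struct {
	Strategy    CreateStrategy
	AfterCreate func(d *Deployment)
	data        map[string]Deployment
}

// Create runs the three steps in order.
func (s *Store) Create(key string, d *Deployment) error {
	// Step 1: BeforeCreate, delegated to the resource's strategy.
	s.Strategy.PrepareForCreate(d)

	// Step 2: Storage.Create, which refuses to overwrite an existing key.
	if s.data == nil {
		s.data = map[string]Deployment{}
	}
	if _, exists := s.data[key]; exists {
		return ErrExists
	}
	s.data[key] = *d

	// Step 3: AfterCreate, an optional hook.
	if s.AfterCreate != nil {
		s.AfterCreate(d)
	}
	return nil
}

func main() {
	s := &Store{Strategy: deploymentStrategy{}}
	d := &Deployment{Name: "nginx-deployment", Generation: 42, Status: "set-by-user"}
	err := s.Create("/registry/deployments/default/nginx-deployment", d)
	fmt.Println(err, d.Generation, d.Status == "")
}
```

The point of the design is that the store knows nothing about Deployments; resource-specific behavior lives entirely in the strategy.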

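Safe Resource Creation and Update

etcd offers the Put and Txn interfaces for adding key-value data. With Put, however, concurrent create requests for the same key silently overwrite one another.

Kubernetes therefore cannot write its data through the plain etcd Put interface.

The etcd transaction interface, Txn, was designed for exactly this purpose: atomic multi-key updates and safe concurrent operations. It provides a rich set of conflict checks.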
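The difference between an unconditional put and a guarded transaction can be shown with an in-memory stand-in. This sketch does not use the etcd client; fakeKV and its methods are hypothetical names, and the guard mimics a "mod_revision equals 0" comparison, which holds only for a key that does not exist.

```go
package main

import (
	"fmt"
	"sync"
)

type entry struct {
	value       string
	modRevision int64 // 0 means the key has never been written
}

// fakeKV is an in-memory stand-in for etcd, used only for illustration.
type fakeKV struct {
	mu       sync.Mutex
	revision int64
	data     map[string]entry
}

func newFakeKV() *fakeKV { return &fakeKV{data: map[string]entry{}} }

// Put writes unconditionally, so a second writer overwrites the first.
func (kv *fakeKV) Put(key, value string) {
	kv.mu.Lock()
	defer kv.mu.Unlock()
	kv.revision++
	kv.data[key] = entry{value: value, modRevision: kv.revision}
}

// CreateIfAbsent models a transaction: compare mod_revision with 0 and
// put only on success. Check and write happen under one lock.
func (kv *fakeKV) CreateIfAbsent(key, value string) bool {
	kv.mu.Lock()
	defer kv.mu.Unlock()
	if kv.data[key].modRevision != 0 {
		return false // the key exists, so the transaction fails
	}
	kv.revision++
	kv.data[key] = entry{value: value, modRevision: kv.revision}
	return true
}

// Get returns the current value of the key.
func (kv *fakeKV) Get(key string) string {
	kv.mu.Lock()
	defer kv.mu.Unlock()
	return kv.data[key].value
}

func main() {
	kv := newFakeKV()

	kv.Put("/registry/pods/default/a", "from client 1")
	kv.Put("/registry/pods/default/a", "from client 2")
	fmt.Println(kv.Get("/registry/pods/default/a")) // client 1's data is lost

	fmt.Println(kv.CreateIfAbsent("/registry/pods/default/b", "from client 1"))
	fmt.Println(kv.CreateIfAbsent("/registry/pods/default/b", "from client 2"))
	fmt.Println(kv.Get("/registry/pods/default/b")) // client 1's data survives
}
```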

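Kubernetes uses the Txn interface to keep concurrent creates and updates from overwriting each other. Once the BeforeCreate strategy has run, kube-apiserver calls the Create interface of the Storage module to write the resource. Since version 1.6, Kubernetes clusters use etcd3 as the default storage backend; a condensed version of its create implementation follows: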

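    // Create implements storage.Interface.Create.
    func (s *store) Create(ctx context.Context, key string, obj, out runtime.Object, ttl uint64) error {
        ......
        // Build the full etcd key from the configured prefix.
        key = path.Join(s.pathPrefix, key)

        // Turn the TTL into put options (a Lease ID may be reused here).
        opts, err := s.ttlOpts(ctx, int64(ttl))
        if err != nil {
            return err
        }

        // Transform (for example, encrypt) the data before it is stored.
        // data is produced in the elided part above.
        newData, err := s.transformer.TransformToStorage(data, authenticatedDataString(key))
        if err != nil {
            return storage.NewInternalError(err.Error())
        }

        startTime := time.Now()
        // Put the key only if it does not exist yet.
        txnResp, err := s.client.KV.Txn(ctx).If(
            notFound(key),
        ).Then(
            clientv3.OpPut(key, string(newData), opts...),
        ).Commit()
        ......
    }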

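From this snippet we can see that it first builds the key according to the Kubernetes resource storage format described earlier.

Next, if the TTL is non-zero, it obtains a reusable Lease ID from the leaseManager based on that TTL. By default, keys whose TTLs differ by less than 1 minute (for example, Kubernetes Event objects) can share the same Lease ID, which keeps a large number of Leases from hurting etcd performance and stability.

Then, if data encryption is enabled, the data is transformed by the encryption algorithm before it is written to etcd. Finally, the etcd Txn interface is used to send etcd a Txn request that creates the Deployment resource.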
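The lease reuse idea can be sketched as follows. This is a simplified model, not the real leaseManager: the type, its fields, and the use of plain integer seconds are assumptions made for the example. A newly granted lease is padded by the reuse window, and a later key reuses it when the lease outlives the key by no more than that window.

```go
package main

import "fmt"

// reuseWindowSec is the 1-minute reuse window mentioned above.
const reuseWindowSec = 60

// leaseManager is a hypothetical, simplified lease cache that only
// remembers the most recently granted lease.
type leaseManager struct {
	nextID        int64
	prevID        int64
	prevExpirySec int64
}

// leaseFor returns a lease ID for a key that must live until
// nowSec+ttlSec. Times are plain seconds to keep the sketch deterministic.
func (m *leaseManager) leaseFor(nowSec, ttlSec int64) int64 {
	wantExpiry := nowSec + ttlSec

	// Reuse the previous lease if it lasts at least as long as the key
	// needs, but not more than the reuse window longer.
	if m.prevID != 0 &&
		m.prevExpirySec >= wantExpiry &&
		m.prevExpirySec-wantExpiry <= reuseWindowSec {
		return m.prevID
	}

	// Otherwise grant a new lease, padded by the reuse window so that
	// keys created shortly afterwards can share it.
	m.nextID++
	m.prevID = m.nextID
	m.prevExpirySec = wantExpiry + reuseWindowSec
	return m.prevID
}

func main() {
	m := &leaseManager{}
	fmt.Println(m.leaseFor(0, 3600))   // a new lease is granted
	fmt.Println(m.leaseFor(30, 3600))  // within the window: same lease
	fmt.Println(m.leaseFor(120, 3600)) // outside the window: a new lease
}
```

The trade-off is that a key may live up to one window longer than its TTL asked for, in exchange for far fewer leases in etcd.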

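Resource Version

Resource Version is a very important concept in the Kubernetes API. As the name suggests, it is the internal version string of a Kubernetes resource, and a client can use it to tell whether the resource has changed. In addition, you can pass a Resource Version value to the Get, List, and Watch interfaces to meet your requirements for data consistency or performance.

- ResourceVersion not specified (the default, an empty string). When kube-apiserver receives a read request of this kind, it sends etcd a quorum (linearizable) read to fetch the latest data of the etcd cluster.

- ResourceVersion="0", the string 0. When kube-apiserver receives such a request, it may return data at any resource version, although it prefers newer versions. It normally serves the data straight from the kube-apiserver cache, so stale data may be read. This suits scenarios with loose consistency requirements.

- ResourceVersion set to a non-zero string. When kube-apiserver receives such a request, it guarantees that the latest ResourceVersion in the Cache is greater than or equal to the ResourceVersion you passed in, then looks up the requested resource key in the Cache and returns the data to the client. The underlying principle is that kube-apiserver maintains a Cache for each core resource (such as Pod) and keeps it up to date through the etcd Watch mechanism. When your Get request carries a non-zero ResourceVersion, kube-apiserver waits until the latest ResourceVersion in the Cache is greater than or equal to the one in the request, and then serves the data from the Cache. It waits at most 3 seconds; if the latest ResourceVersion in the Cache is still smaller than the requested one after that, it returns a ResourceVersionTooLarge error to the client.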
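The three cases can be summarized in a small simulation. The watchCache type, its methods, and the returned strings are hypothetical; the real kube-apiserver cache is far more involved. The timeout is a parameter here so that the example finishes quickly; the behavior described above corresponds to 3000 milliseconds.

```go
package main

import (
	"errors"
	"fmt"
	"strconv"
	"sync"
	"time"
)

var errTooLarge = errors.New("ResourceVersionTooLarge")

// watchCache is a toy stand-in for the kube-apiserver cache; it only
// tracks the latest resource version it has seen.
type watchCache struct {
	mu     sync.Mutex
	latest uint64
}

func (c *watchCache) set(rv uint64) {
	c.mu.Lock()
	c.latest = rv
	c.mu.Unlock()
}

func (c *watchCache) current() uint64 {
	c.mu.Lock()
	defer c.mu.Unlock()
	return c.latest
}

// get reports where a read with the given ResourceVersion is served from.
func (c *watchCache) get(rv string, timeoutMs int) (string, error) {
	switch rv {
	case "":
		return "etcd", nil // quorum read of the latest data
	case "0":
		return "cache", nil // any version, possibly stale
	}
	want, err := strconv.ParseUint(rv, 10, 64)
	if err != nil {
		return "", err
	}
	// Wait until the cache has caught up with the requested version.
	deadline := time.Now().Add(time.Duration(timeoutMs) * time.Millisecond)
	for c.current() < want {
		if time.Now().After(deadline) {
			return "", errTooLarge
		}
		time.Sleep(time.Millisecond)
	}
	return "cache", nil
}

func main() {
	c := &watchCache{latest: 10}
	fmt.Println(c.get("", 3000))
	fmt.Println(c.get("0", 3000))

	// The cache catches up shortly after the request arrives.
	go func() {
		time.Sleep(5 * time.Millisecond)
		c.set(12)
	}()
	fmt.Println(c.get("12", 3000))

	// The cache never reaches version 100, so the request times out.
	fmt.Println(c.get("100", 20))
}
```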

etcd Interaction Analysis

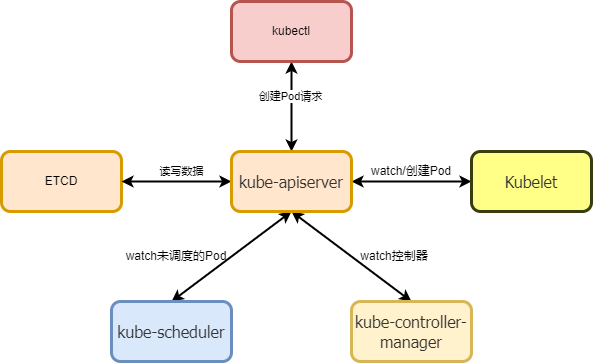

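Kubernetes Component Overview

- kube-apiserver exposes the create, read, update, delete, and Watch interfaces for all kinds of cluster resources. It is the hub for data exchange and communication between the components of a Kubernetes cluster. kube-apiserver is designed to scale horizontally, and highly available Kubernetes clusters usually run several replicas. When it receives a write request that creates a Pod, the basic flow is to run the authentication, rate-limiting, authorization, and admission checks and then write the object to etcd.

- kube-scheduler is the scheduler component, responsible for scheduling the Pods of the cluster. It watches kube-apiserver for Pods waiting to be scheduled and then, using a series of filtering and scoring algorithms, assigns each Pod the most suitable Node.

- kube-controller-manager contains a collection of controllers, such as the Deployment and StatefulSet controllers. The core idea of a controller is to watch a resource, compare its actual state with its desired state, and reconcile the two whenever they differ until they converge.

- etcd is the metadata store of Kubernetes.

- kubelet is the agent deployed on every node, responsible for creating and running Pods. It watches the API server for Pods assigned to its node and then, based on the Pod spec, calls the container runtime to create the pause container, the application containers, and so on.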
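The controller idea of comparing actual state with desired state can be sketched in a few lines. This is an illustration only: the replicaSet type and the pod naming scheme are invented for the example and do not come from kube-controller-manager.

```go
package main

import "fmt"

// replicaSet is a toy model: desired is the declared replica count,
// pods is the observed state.
type replicaSet struct {
	desired int
	pods    []string
	created int // counter used to generate hypothetical pod names
}

// reconcileOnce takes a single step toward the desired state and
// reports whether it changed anything.
func (rs *replicaSet) reconcileOnce() bool {
	switch {
	case len(rs.pods) < rs.desired:
		// Too few pods: create one.
		rs.created++
		rs.pods = append(rs.pods, fmt.Sprintf("pod-%d", rs.created))
	case len(rs.pods) > rs.desired:
		// Too many pods: delete the newest one.
		rs.pods = rs.pods[:len(rs.pods)-1]
	default:
		// Actual state matches desired state.
		return false
	}
	return true
}

// reconcile repeats single steps until the states match and returns
// the number of steps taken.
func (rs *replicaSet) reconcile() int {
	steps := 0
	for rs.reconcileOnce() {
		steps++
	}
	return steps
}

func main() {
	rs := &replicaSet{desired: 3}
	fmt.Println(rs.reconcile(), rs.pods) // scale up from 0 to 3

	rs.desired = 1
	fmt.Println(rs.reconcile(), rs.pods) // scale down from 3 to 1
}
```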

First, we create the Deployment resource with the kubectl create -f nginx.yml command, which sends a request to kube-apiserver.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deployment
      labels:
        app: nginx
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: nginx:1.14.2
            ports:
            - containerPort: 80

    $ kubectl create -f nginx.yml
    deployment.apps/nginx-deployment created

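kube-apiserver receives the request to create the nginx Deployment. Once it has written the data to etcd through the Txn interface, the kubectl create -f nginx.yml command completes and the response is returned to the client.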

The request log that etcd records for the creation of the nginx Deployment:

    2021-12-23 11:49:16.320328 D | etcdserver/api/v3rpc: start time = 2021-12-23 11:49:16.319917473 +0000 UTC m=+416.447615057, time spent = 386.445µs, remote = 127.0.0.1:45596, response type = /etcdserverpb.KV/Txn, request count = 1, request size = 1769, response count = 0, response size = 41, request content = compare:<target:MOD key:"/registry/deployments/default/nginx-deployment" mod_revision:0 > success:<request_put:<key:"/registry/deployments/default/nginx-deployment" value_size:1715 >> failure:<>

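Explanation:

- The module and interface being called: KV/Txn.

- The key path, /registry/deployments/default/nginx-deployment, composed of prefix + "/" + resource type + "/" + namespace + "/" + resource name.

- The safe concurrent-create check: the put is executed only when mod_revision is 0, that is, when the key does not exist yet.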

Through the Watch mechanism, kube-controller-manager quickly notices the new Deployment resource, enters its reconciliation logic, and creates a ReplicaSet resource.

The request log that etcd records for the creation of the ReplicaSet:

    2021-12-23 11:49:16.331340 D | etcdserver/api/v3rpc: start time = 2021-12-23 11:49:16.330695394 +0000 UTC m=+416.458392972, time spent = 584.196µs, remote = 127.0.0.1:45564, response type = /etcdserverpb.KV/Txn, request count = 1, request size = 1806, response count = 0, response size = 41, request content = compare:<target:MOD key:"/registry/replicasets/default/nginx-deployment-66b6c48dd5" mod_revision:0 > success:<request_put:<key:"/registry/replicasets/default/nginx-deployment-66b6c48dd5" value_size:1741

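kube-controller-manager likewise notices the new ReplicaSet through the Watch mechanism and issues a request to create the Pod, so that the number of running Pods matches the desired count.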

    2021-12-23 11:49:16.353609 D | etcdserver/api/v3rpc: start time = 2021-12-23 11:49:16.353112742 +0000 UTC m=+416.480810323, time spent = 474.04µs, remote = 127.0.0.1:45628, response type = /etcdserverpb.KV/Txn, request count = 1, request size = 1637, response count = 0, response size = 41, request content = compare:<target:MOD key:"/registry/pods/default/nginx-deployment-66b6c48dd5-gl7zl" mod_revision:0 > success:<request_put:<key:"/registry/pods/default/nginx-deployment-66b6c48dd5-gl7zl" value_size:1573 >>

Several Events are generated along the way. Below is the request etcd receives for adding an Event resource; note that the Event key is associated with a Lease:

    2021-12-23 11:49:16.356776 D | etcdserver/api/v3rpc: start time = 2021-12-23 11:49:16.356368351 +0000 UTC m=+416.484065931, time spent = 388.188µs, remote = 127.0.0.1:45590, response type = /etcdserverpb.KV/Txn, request count = 1, request size = 776, response count = 0, response size = 41, request content = compare:<target:MOD key:"/registry/events/default/nginx-deployment-66b6c48dd5.16c35febf6bb59a0" mod_revision:0 > success:<request_put:<key:"/registry/events/default/nginx-deployment-66b6c48dd5.16c35febf6bb59a0" value_size:689 lease:955464502472756512 >> failure:<>

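At this point kube-scheduler sees the Pod waiting to be scheduled, picks a Node for it, and uses the Bind interface of kube-apiserver to bind the chosen node to the Pod resource. kubelet notices the new Pod through the same Watch mechanism and starts the Pod creation flow.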
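The whole chain, from Deployment to ReplicaSet to Pod to node binding, can be replayed with a toy store whose watchers are called synchronously on every create. All names here are assumptions for illustration: the store type, the wire function, and node-1 are made up, and the suffixes 66b6c48dd5 and gl7zl are simply copied from the logs above rather than computed.

```go
package main

import (
	"fmt"
	"strings"
)

// store is an in-memory stand-in for etcd plus the Watch mechanism.
type store struct {
	data     map[string]string
	order    []string // keys in creation order
	watchers []func(key string)
}

func newStore() *store { return &store{data: map[string]string{}} }

// create writes the key only if it is absent, then notifies all watchers.
func (s *store) create(key, value string) bool {
	if _, exists := s.data[key]; exists {
		return false
	}
	s.data[key] = value
	s.order = append(s.order, key)
	for _, notify := range s.watchers {
		notify(key)
	}
	return true
}

// watch calls handle for every new key of the form prefix+namespace/name.
func (s *store) watch(prefix string, handle func(namespace, name string)) {
	s.watchers = append(s.watchers, func(key string) {
		if !strings.HasPrefix(key, prefix) {
			return
		}
		parts := strings.Split(strings.TrimPrefix(key, prefix), "/")
		if len(parts) == 2 {
			handle(parts[0], parts[1])
		}
	})
}

// wire registers three toy components and returns the pod-to-node bindings.
func wire(s *store) map[string]string {
	bindings := map[string]string{}
	// Deployment controller: a new Deployment leads to a ReplicaSet.
	s.watch("/registry/deployments/", func(ns, name string) {
		s.create("/registry/replicasets/"+ns+"/"+name+"-66b6c48dd5", "replicaset")
	})
	// ReplicaSet controller: a new ReplicaSet leads to a Pod.
	s.watch("/registry/replicasets/", func(ns, name string) {
		s.create("/registry/pods/"+ns+"/"+name+"-gl7zl", "pod")
	})
	// Scheduler: a new Pod gets bound to a (hypothetical) node.
	s.watch("/registry/pods/", func(ns, name string) {
		bindings[ns+"/"+name] = "node-1"
	})
	return bindings
}

func main() {
	s := newStore()
	bindings := wire(s)
	s.create("/registry/deployments/default/nginx-deployment", "deployment")
	for _, key := range s.order {
		fmt.Println(key)
	}
	fmt.Println(bindings)
}
```

In a real cluster these steps are asynchronous and every write goes through kube-apiserver; the synchronous callbacks here only make the ordering easy to follow.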
